We’ve tried and rejected many numerical approaches to differentiation. Richard Harris has one final attempt.

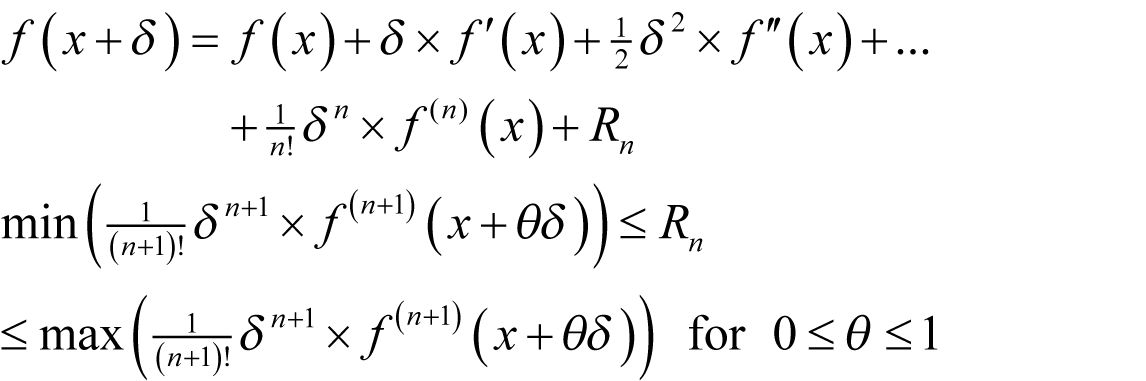

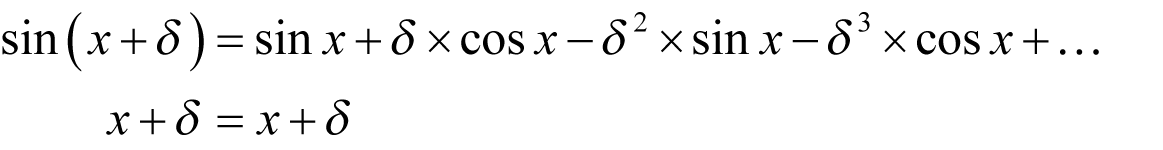

At the beginning of the second half of this series we briefly covered the history of the differential calculus and described the incredibly useful Taylor’s theorem, which states that for a function f

where f' ( x ) denotes the first derivative of f at x , f" ( x ) denotes the second and f ( n )( x ) the n th and where, by convention, the 0 th derivative is the function itself.

We have so far used it to perform a full error analysis of finite difference algorithms and to automate the calculation of their terms with polynomial curve fitting.

We went on to describe the far more accurate polynomial approximation of Ridders’ algorithm [ Ridders82 ] which treated the symmetric finite difference as a function of the difference δ .

In the previous article we used expression objects to represent functions as expression trees, from which it was possible to compute expression representing their derivative by applying the relatively simple algebraic rules of differentiation.

Noting that there was little we could do to control cancellation error in the unknown expression for the derivative, assuming we hadn’t the appetite to implement a full blown computer algebra system that is, we concluded by combining this with interval arithmetic to automatically keep track of such numerical errors.

Having claimed that short of just such a system our algorithm was as accurate as it was possible to get, you may be a little surprised that there is anything left to discuss.

However, as I also noted, expression objects are fairly memory intensive due to the need to maintain the expression tree. Furthermore, a naïve implementation such as ours is computationally expensive since it puts cheap built in arithmetic operations behind relatively expensive virtual function calls. The latter point isn’t particularly compelling since we can in many cases remove the virtual functions by instead using templates.

Finally, we must take care when using expression objects in conjunction with numerical approximations. As was demonstrated, it is quite possible to accurately approximate one function with another whose derivative is significantly different.

Whilst expression objects provide a powerful method for the calculation of derivatives, we should ideally seek an equally accurate algorithm that doesn’t require as much memory and uses no virtual functions or heavily nested template types.

The question stands as to whether such an algorithm exists.

Given that I’m still writing, it should be more or less self-evident that it does.

And, in another testament to its tremendous power, it’s based entirely upon Taylor’s theorem.

Surreal numbers

Like Robinson’s non-standard numbers [ Robinson74 ], Conway’s surreal numbers [ Knuth74 ] extend the reals in a way that admits a rigorous definition of infinitesimals.

So what’s to stop us from actually using infinitesimals in our calculations?

Nothing. That’s what!

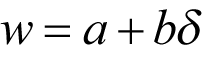

Much as we extend the reals to the complex numbers by representing numbers with both a real and an imaginary part, we can extend the reals to the surreals by representing numbers with both a real and an infinitesimal part.

Note that we don’t actually need to define δ so long as it stands for the same infinitesimal throughout our calculations.

Listing 1 gives a C++ definition of just such a numeric type.

class surreal

{

public:

surreal();

surreal(double a);

surreal(double a, double b);

double real() const;

double inf() const;

int compare(const surreal &w) const;

surreal & negate();

surreal & operator+=(const surreal &w);

surreal & operator-=(const surreal &w);

surreal & operator*=(const surreal &w);

surreal & operator/=(const surreal &w);

private:

double a_;

double b_;

};

|

| Listing 1 |

Listing 2 provides the constructors and property accessors of the

surreal

class.

surreal::surreal() : a_(0.0), b_(0.0)

{

}

surreal::surreal(double a) : a_(a), b_(0.0)

{

}

surreal::surreal(double a, double b) : a_(a),

b_(b)

{

}

double

surreal::real() const

{

return a_;

}

double

surreal::inf() const

{

return b_;

}

|

| Listing 2 |

The

compare

member function first compares the real part of the number and, if they are equal, then compares the infinitesimal part, as shown in listing 3.

int surreal::compare(const surreal &w) const

{

if(a_<w.a_) return -1;

if(a_>w.a_) return 1;

if(b_<w.b_) return -1;

if(b_>w.b_) return 1;

return 0;

}

|

| Listing 3 |

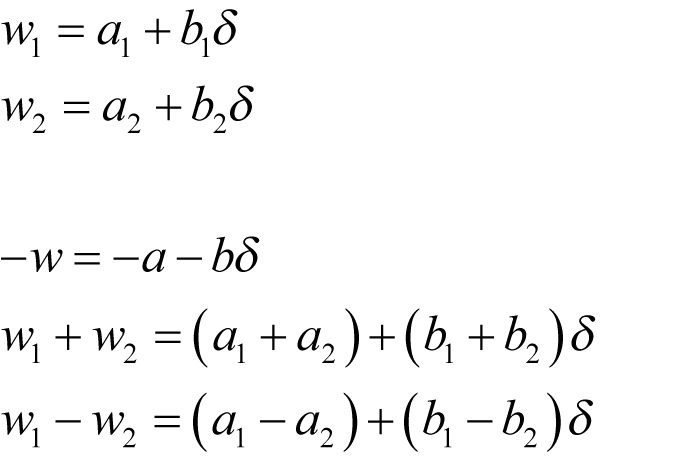

Negation, addition and subtraction are trivial operations in which we perform the same action on both parts of the number

The implementation of these operations is given in listing 4.

surreal &

surreal::negate()

{

a_ = -a_;

b_ = -b_;

return *this;

}

surreal &

surreal::operator+=(const surreal &w)

{

a_ += w.a_;

b_ += w.b_;

return *this;

}

surreal &

surreal::operator-=(const surreal &w)

{

a_ -= w.a_;

b_ -= w.b_;

return *this;

}

|

| Listing 4 |

Multiplication proceeds much as it does for complex numbers, although we shall discard the term involving the square of δ .

Listing 5 provides the implementation of the multiplication operator.

surreal &

surreal::operator*=(const surreal &w)

{

b_ = a_*w.b_ + w.a_*b_;

a_ *= w.a_;

return *this;

}

|

| Listing 5 |

Division would seem to be a slightly trickier proposition; we’re ignoring second and higher order powers of δ . If the product of two of our surreal number has a second order term, surely we’ll need it when dividing the product by one of the arguments if we are to have any chance of recovering the other. Or of recovering the correct result when dividing it by any other surreal number, for that matter.

Well, appearances can be deceptive.

Surreal number division

The trick is one we’re going to exploit time and again when implementing arithmetic operations for this type; no matter what real number we multiply δ by, it’s still infinitesimal, which means that we can still use Taylor’s theorem!

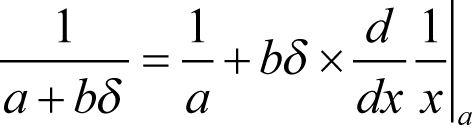

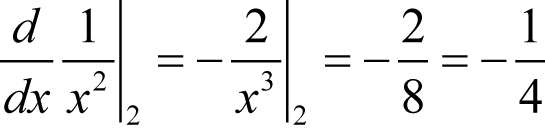

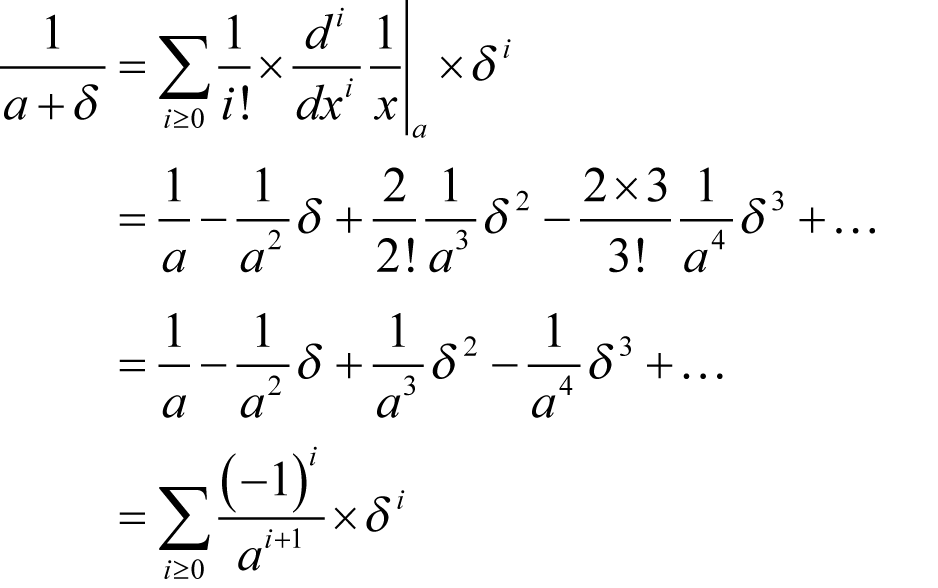

What we shall therefore do is work out the Taylor series expansion of reciprocation to first order. We can then multiply the numerator by the reciprocal of the denominator. The required series for the reciprocal is given by

where the vertical bar means ‘evaluated at the subscript’.

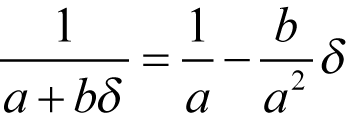

Hence our rule for reciprocation is

Note that this trick is effectively the chain rule for our

surreal

type in that it allows us to calculate the derivative of an expression in which one function is applied to the result of another.

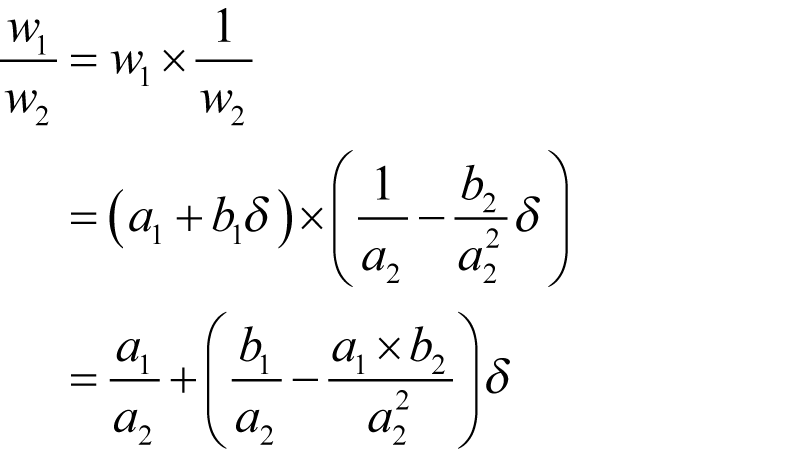

The rule for division is therefore given by

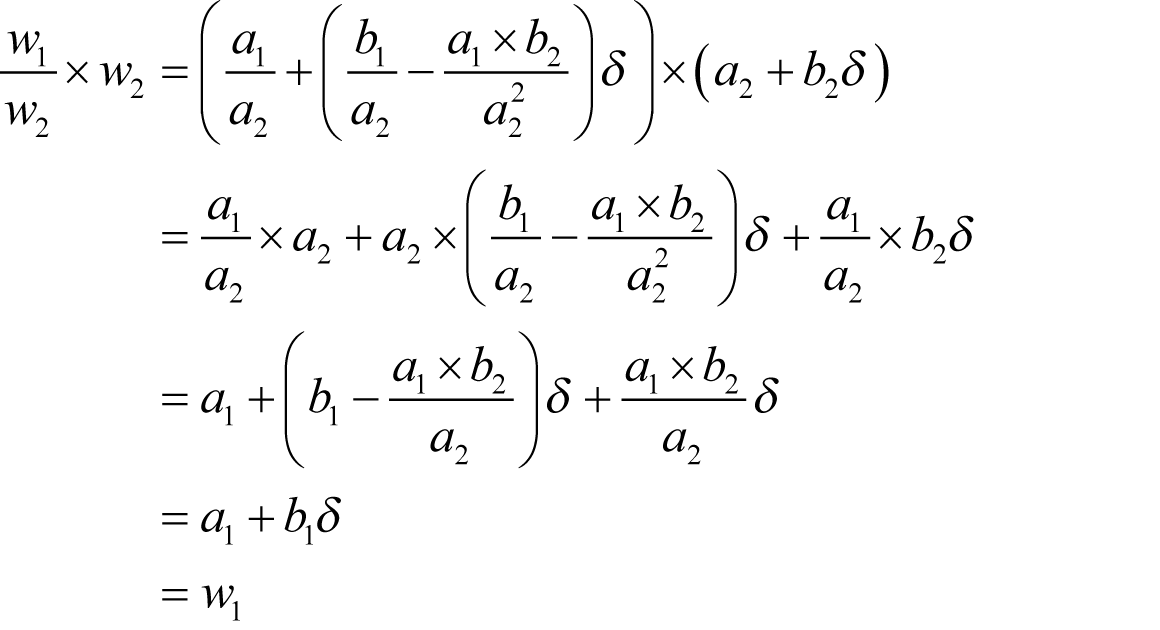

Now something about this sidestepping of the need to retain higher order infinitesimals seems a little bit fishy. To be truly comfortable that I haven’t done something silly we should probably check that multiplying the ratio of w 1 and w 2 by w 2 yields w 1 as expected.

Well, it’s clear that my reservations were undeserved; I really should have had more faith in Taylor’s remarkable result. We can clearly use this formula with confidence and the implementation in listing 6 does just that.

surreal &

surreal::operator/=(const surreal &w)

{

b_ = b_/w.a_ - a_*w.b_/(w.a_*w.a_);

a_ /= w.a_;

return *this;

}

|

| Listing 6 |

Whither algorithm?

Now this consistent extension of real arithmetic is all well and good, but how does it help us to develop a machine precision accurate algorithm for computing derivatives?

Well, the answer, perhaps a little unsurprisingly, lies in Taylor’s theorem.

The

surreal

result of a function is a first order Taylor series expansion about an infinitesimal deviation from the value of that function. By Taylor’s theorem, the coefficient by which

δ

is multiplied in the result of a function is therefore equal to its first derivative.

We can therefore trivially recover the derivative of a calculation by calling the

inf

member function on its result.

For example consider

Using our

surreal

type we can simply divide 1 by the square of an infinitesimal deviation from 2

Clearly the coefficient of the infinitesimal part is equal to the required derivative.

This use of the Taylor series to compute derivatives is known as automatic differentiation, as the title of this article might have hinted at.

Further arithmetic operations

Before we can use our

surreal

type to compute the derivatives of functions in general, we shall need to implement the full suite of C++ arithmetic operations.

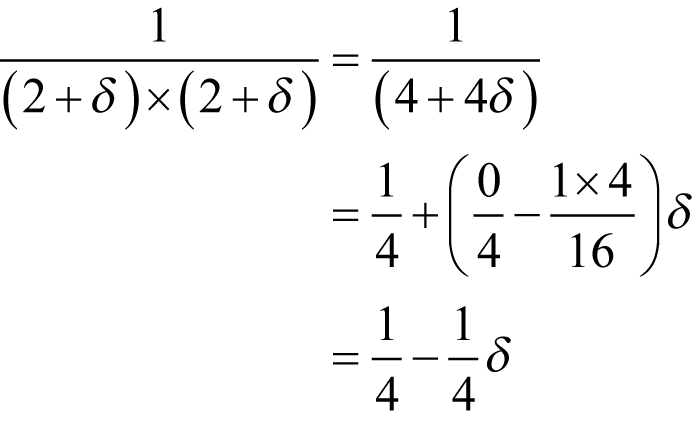

This would take up rather a lot of time, so we shall content ourselves with a few pertinent examples. Specifically

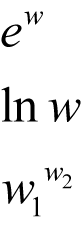

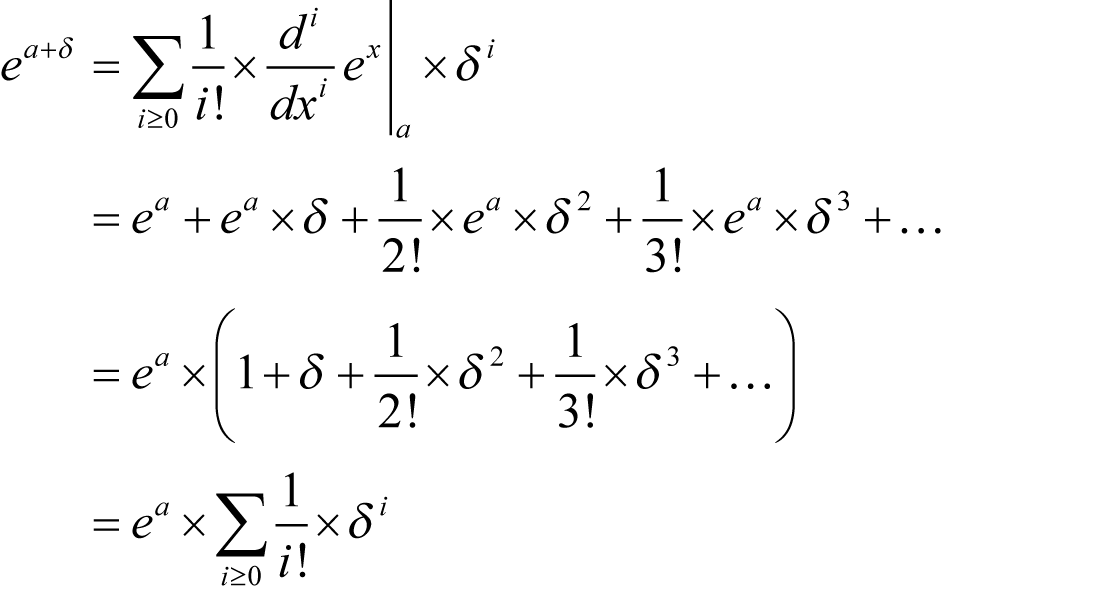

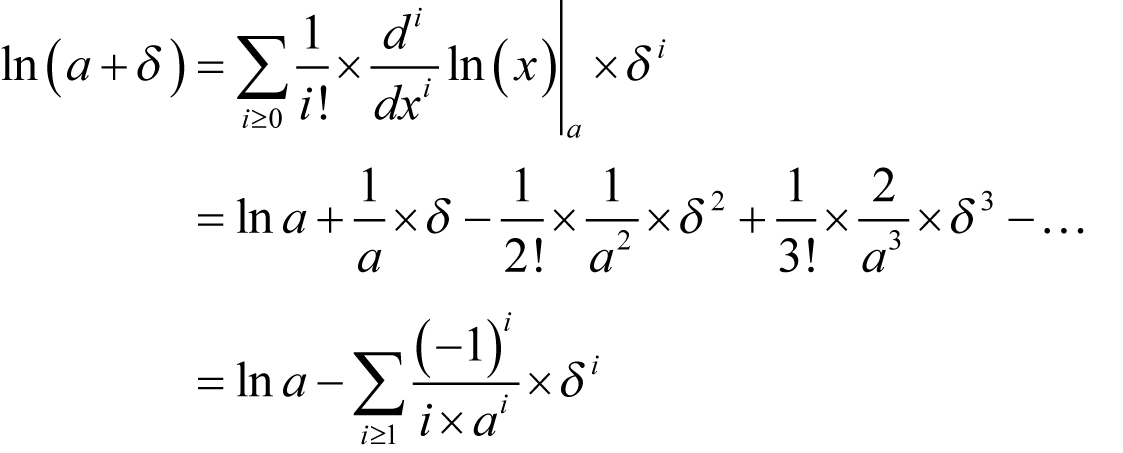

The first two functions simply require the first order Taylor’s series expansions

as illustrated in listing 7.

surreal

exp(const surreal &w)

{

const double exp_a = exp(w.real());

return surreal(exp_a, exp_a*w.inf());

}

surreal

log(const surreal &w)

{

return surreal(log(w.real()),

w.inf()/w.real());

}

|

| Listing 7 |

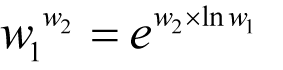

The third is a little less obvious, but we can use the same trick we used for our expression objects and rewrite it as

as implemented in listing 8.

surreal

pow(const surreal &w1, const surreal &w2)

{

return exp(w2*log(w1));

}

|

| Listing 8 |

The remaining arithmetic operations are left as an exercise for the reader.

Higher order derivatives

An immediately obvious problem with our

surreal

type is that, as it stands, we cannot use it to compute second and higher order derivatives.

We might be tempted to change the

b_ member

from a

double

to a

surreal

, allowing us to recover the second derivative from the infinitesimal part of the infinitesimal part.

However, as we try to compute higher and higher derivatives we will inevitably be forced into using complex recursive template trickery which is something I specifically wanted to avoid.

Fortunately, there’s a better way which, as I’m sure you will already suspect, is based on Taylor’s theorem.

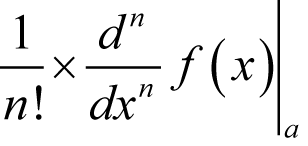

Note that the coefficient of δ n in the Taylor series expansion of a function f about a value a is

If we therefore keep track of the first n +1 terms of the Taylor series rather than just the first two we can recover the n th derivative by multiplying the coefficient of δ n by n !.

The first change we need to make to our

surreal

type is to store an array of coefficients rather than just two, as illustrated in listing 9.

template<size_t n>

class surreal

{

public:

typedef boost::array<double, n+1> coeffs_type;

surreal();

surreal(double a);

surreal(double a, double b);

explicit surreal(const coeffs_type &coeffs);

double coeff(size_t i) const;

const coeffs_type &coeffs() const;

int compare(const surreal &w) const;

surreal & negate();

surreal & operator+=(const surreal &w);

surreal & operator-=(const surreal &w);

surreal & operator*=(const surreal &w);

surreal & operator/=(const surreal &w);

private:

coeffs_type coeffs_;

};

|

| Listing 9 |

Note that here n represents the order of the Taylor series rather than the number of terms and we must consequently use an array of length n +1.

This means that we might run into trouble if

n

is the maximum value of

size_t

so I shall admit one tiny bit of template trickery and specialise the class to protect against this, as shown in listing 10.

template<>

class surreal<size_t(-1)>

{

};

|

| Listing 10 |

We have kept our original constructors and have added one which initialises all of the coefficients. Their implementations are given in listing 11.

template<size_t n>

surreal<n>::surreal()

{

coeffs_.assign(0);

}

template<size_t n>

surreal<n>::surreal(const double a)

{

coeffs_.assign(0);

coeffs_[0] = a;

}

template<size_t n>

surreal<n>::surreal(const double a,

const double b)

{

coeffs_.assign(0);

coeffs_[0] = a;

coeffs_.at(1) = b;

}

template<size_t n>

surreal<n>::surreal(const coeffs_type &coeffs)

: coeffs_(coeffs)

{

}

|

| Listing 11 |

Note that we shall now access the coefficients by their ordinal position in the Taylor series and that we shall return a NaN rather than throw when reading past the end of the coefficients as shown in the definition of

coeff

in listing 12.

template<size_t n>

double

surreal<n>::coeff(const size_t i) const

{

if(i>n) return

std::numeric_limits<double>::quiet_NaN();

return coeffs_[i];

}

template<size_t n>

const typename surreal<n>::coeffs_type &

surreal<n>::coeffs() const

{

return coeffs_;

}

|

| Listing 12 |

This might seem a little contrary to the C++ way of doing things but the reason for this design choice should become clear a little later on.

Next up is the

compare

member function which operates in much the same way as

std::lexicographical_compare

, implemented in listing 13.

template<size_t n>

int

surreal<n>::compare(const surreal &w) const

{

size_t i=0;

while(i!=n && coeffs_[i]==w.coeffs_[i]) ++i;

if(coeffs_[i]<w.coeffs_[i]) return -1;

if(coeffs_[i]>w.coeffs_[i]) return 1;

return 0;

}

|

| Listing 13 |

If you think this looks like I’ve made the beginners’ mistake of reading past the end of the coefficients array, don’t forget that it has n +1 elements.

The negation, addition and subtraction operators are hardly more complex than the original versions, as shown in listing 14.

template<size_t n>

surreal<n> &

surreal<n>::negate()

{

for(size_t i=0;i<=n;++i)

coeffs_[i] = -coeffs_[i];

return *this;

}

template<size_t n>

surreal<n> &

surreal<n>::operator+=(const surreal &w)

{

for(size_t i=0;i<=n;++i)

coeffs_[i] += w.coeffs_[i];

return *this;

}

template<size_t n>

surreal<n> &

surreal<n>::operator-=(const surreal &w)

{

for(size_t i=0;i<=n;++i)

coeffs_[i] -= w.coeffs_[i];

return *this;

}

|

| Listing 14 |

Unfortunately we have now effectively introduced a branch which may harm performance. However, since the bounds of the loop counter are known at compile time an optimising compiler has an opportunity to unroll the loops for small n .

Generalised surreal multiplication

Multiplication is a slightly more complicated proposition since there may be several pairs of terms from the left and right hand side that yield a given order when multiplied.

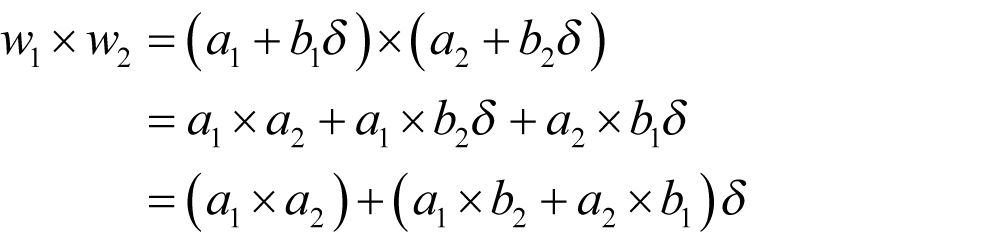

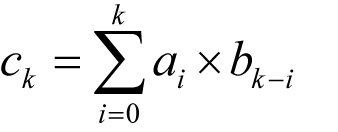

The process for multiplying polynomials is known as a discrete convolution in which each coefficient in the result is equal to the sum

where a i and b i are the coefficients of the i th order terms of the arguments and c i is the same of the result.

This is much like the algorithm we used to multiply bignums, except without the carry, and as was the case then efficient algorithms exist that use the Fast Fourier Transform.

We shan’t go to such trouble, but we shall at least arrange to apply the process in-place by iterating backwards over the coefficients of the result, as shown in listing 15.

template<size_t n>

surreal<n> &

surreal<n>::operator*=(const surreal &w)

{

for(size_t i=0;i<=n;++i)

{

coeffs_[n-i] *= w.coeffs_[0];

for(size_t j=1;j<=n-i;++j)

{

coeffs_[n-i] += coeffs_[n-i-j]

* w.coeffs_[j];

}

}

return *this;

}

|

| Listing 15 |

Note that we can only get away with this because we are discarding all terms above n th order.

Generalised surreal division

As was the case for our first order

surreal

type, division is trickier still. Once again we shall multiply the numerator by the reciprocal of the denominator, but we shall need to work out the Taylor series for the reciprocal to

n

th

order.

Fortunately, as will often prove the case for arithmetic operations, this isn’t actually too difficult.

Now, we have the further complication that there may be several terms with non-zero powers of δ in w . Fortunately, it doesn’t actually matter since their sum is still an infinitesimal and can consequently take the place of the δ in any Taylor series expansion.

The easiest way to do this is to use a

surreal

δ

equal to the infinitesimal part of

w

and repeatedly multiply 1 by it to generate its integer powers, as illustrated in listing 16.

template<size_t n>

surreal<n> &

surreal<n>::operator/=(const surreal &w)

{

const double y = w.coeffs_[0];

double si = 1.0;

surreal rw(1.0/y);

surreal di(1.0);

surreal d(w);

d[0] = 0.0;

for(size_t i=1;i<=n;++i)

{

si = -si;

const double yi = si * pow(y, double(i+1));

di *= d;

for(size_t j=i;j<=n;++j)

rw.coeffs_[j] += di.coeffs_[j] / yi;

}

return *this *= rw;

}

|

| Listing 16 |

Note that the inner loop needn’t use coefficients of

di

of order less than

i

since they must be zero after having multiplied by the 0 valued 0

th

order coefficient of

d

.

This suggests an optimisation in which we replace the multiplicative update step for

di

with one which doesn’t update coefficients of order less than

i

or use coefficients of order less than

i-1

, as shown in listing 17.

namespace impl

{

template<size_t size>

void

update_di(const boost::array<double, size> &d,

const size_t i,

boost::array<double, size> &di)

{

static const size_t n = size-1;

for(size_t j=0;j<=n-i;++j)

{

di[n-j] = 0.0;

for(size_t k=1;k<=n+1-(i+j);++k)

di[n-j] += di[n-(j+k)] * d[k];

}

}

}

|

| Listing 17 |

Note that there are no safeguards against calling this helper function with invalid values for

size

and/or

i

. Instead we shall rely upon the convention that

impl

should be read as ‘enter at your own peril’.

That caveat out of the way, we can replace the multiplicative update step with a call to this function as shown in listing 18.

template<size_t n>

surreal<n> &

surreal<n>::operator/=(const surreal &w)

{

const double y = w.coeffs_[0];

surreal rw(1.0/y);

double si = -1.0;

surreal di(w);

const double y1 = -y*y;

for(size_t j=1;j<=n;++j)

rw.coeffs_[j] += di.coeffs_[j] / y1;

for(size_t i=2;i<=n;++i)

{

si = -si;

const double yi = si * pow(y, double(i+1));

impl::update_di(w.coeffs_, i, di.coeffs_);

for(size_t j=i;j<=n;++j)

rw.coeffs_[j] += di.coeffs_[j] / yi;

}

return *this *= rw;

}

|

| Listing 18 |

Generalised arithmetic operations

As before, reciprocation shines a light on how we might go about implementing other arithmetic operations and, once again, we shall choose as examples

We shan’t bother with the power this time since, as we have already seen, we can implement it in terms of these two.

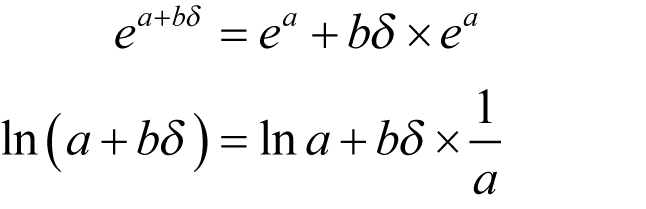

The first step is, of course, to work out the Taylor series expansions of these functions. Fortunately this isn’t very difficult; the Taylor series of the exponential is given by

and that of the logarithm by

With these formulae to hand, implementing the exponential and logarithmic functions is but a simple matter of programming, as shown in listings 19 and 20.

template<size_t n>

surreal<n>

exp(const surreal<n> &w)

{

double yi = 1.0;

boost::array<double, n+1> di = w.coeffs();

boost::array<double, n+1> ew = di;

ew[0] = 1.0;

for(size_t i=2;i<=n;++i)

{

yi *= double(i);

impl::update_di(w.coeffs(), i, di);

for(size_t j=i;j<=n;++j)

ew[j] += di[j] / yi;

}

const double y = exp(w.coeff(0));

for(size_t i=0;i<=n;++i) ew[i] *= y;

return surreal<n>(ew);

}

|

| Listing 19 |

template<size_t n>

surreal<n>

log(const surreal<n> &w)

{

const double y = w.coeff(0);

double si = 1.0;

boost::array<double, n+1> di = w.coeffs();

boost::array<double, n+1> lnw = {{log(y)}};

for(size_t j=1;j<=n;++j) lnw[j] += di[j] / y;

for(size_t i=2;i<=n;++i)

{

si = -si;

const double yi =

si * double(i) * pow(y, double(i));

impl::update_di(w.coeffs(), i, di);

for(size_t j=i;j<=n;++j)

lnw[j] += di[j] / yi;

}

return surreal<n>(lnw);

}

|

| Listing 20 |

Now we are nearly done, but there’s one last use of the

surreal

type that I’d like to cover before we conclude.

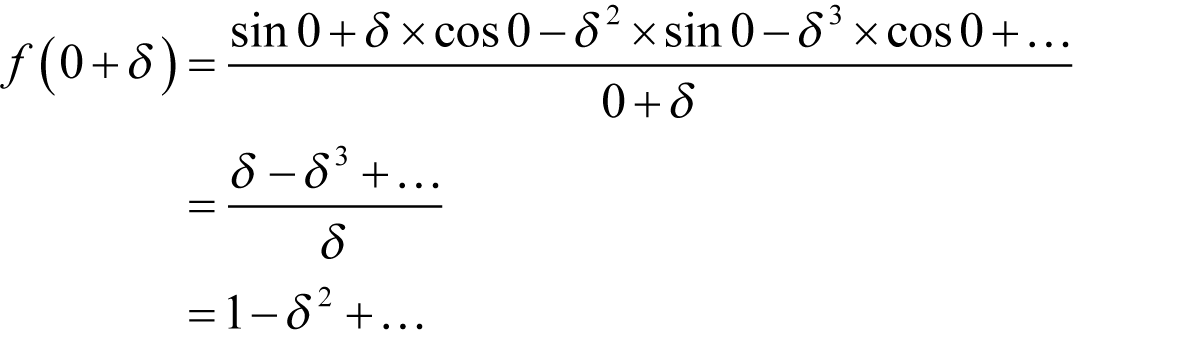

L’Hôpital’s rule

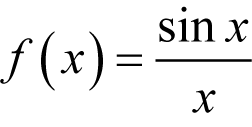

Consider the function

What is its value when x is equal to 0?

On the face of it that would seem to be a meaningless question. Certainly calculating it using floating point will yield a NaN.

However, it is in fact 1.

This rather surprising result can be demonstrated using, you guessed it, Taylor’s theorem!

The Taylor series expansions of the numerator and denominator are

Setting x equal to zero gives

Note that if the first order terms of the numerator and denominator were also zero we could continue on to the second order terms in the same fashion.

Since our surreal type keeps track of the coefficients of the Taylor series we can easily extend division to exploit L’Hôpital’s rule, allowing us to correctly evaluate such ratios.

We shall do this by first finding the lowest order for which the coefficient in the Taylor series of either the numerator or denominator is non-zero. We shall then copy the coefficients of the denominator from that point on into the lowest order coefficients of our δ , leaving the higher order terms equal to zero.

Finally we shall shift the coefficients in the numerator to the left and fill the higher order terms with NaNs before multiplying by the reciprocal, as shown in listing 21.

template<size_t n>

surreal<n> &

surreal<n>::operator/=(const surreal &w)

{

static const double nan =

std::numeric_limits<double>::quiet_NaN();

size_t off=0;

while(coeffs_[off]==0.0 && w.coeffs_[off]==

0.0 && off!=n) ++off;

const double y = w.coeffs_[off];

surreal rw(1.0/y);

double si = -1.0;

surreal d, di;

for(size_t i=0;i<=n-off;++i) d[i]

= di[i] = w[i+off];

const double y1 = -y*y;

for(size_t j=1;j<=n-off;++j) rw.coeffs_[j]

+= di.coeffs_[j] / y1;

for(size_t i=2;i<=n-off;++i)

{

si = -si;

const double yi = si * pow(y, double(i+1));

impl::update_di(d.coeffs_, i, di.coeffs_);

for(size_t j=i;j<=n;++j) rw.coeffs_[j]

+= di.coeffs_[j] / yi;

}

if(off!=0)

{

for(size_t i=0;i<=off;++i)

coeffs_[i] = coeffs_[i+off];

for(size_t i=off+1;i<=n;++i) coeffs_[i] = nan;

}

return *this *= rw;

}

|

| Listing 21 |

This final padding with NaNs is the reason why we could leave zeros in our δ ; it doesn’t matter what value they have since any terms they effect will eventually be multiplied by NaN.

We might improve the efficiency of division by making

update_di

and the final multiplication aware of the truncation of the terms, but since this is likely to be a relatively rare occurrence it hardly seems worth it.

Now, at last, I can justify the design choice of returning NaNs when reading past the end of the coefficients rather than throwing exceptions. The application of L’Hôpital’s rule means that we have less information for computing coefficients, so some high order coefficients in the array may be undefined and, in floating point arithmetic, undefined is spelt NaN.

Making a distinction between coefficients undefined because we didn’t choose a high enough order

surreal

and coefficients undefined because of L’Hôpital’s rule doesn’t make a great deal of sense to me; both are a consequence of a lack of information

1

.

One change that will almost certainly be necessary is to replace the equality comparisons when computing

off

with one that checks whether the terms are very small.

Unfortunately the meaning of very small is rather determined by the problem at hand; it needs to be relative to the order of magnitude of the variables in the calculation.

We should therefore need to add some facility for the user to provide this information, preferably as a template argument so that we cannot perform arithmetic with numbers that disagree on the matter. Since we cannot use floating point values as template arguments we should probably have to content ourselves with an integer representing a power of 10.

The Holy Grail?

So we finally have an algorithm for computer differentiation that is as accurate as possible without a computer algebra system and parsimonious with memory to boot.

I must admit that I have something of a soft spot for this approach having independently discovered it a few years ago. Even though I subsequently discovered that I wasn’t the first, I still find it to be more satisfying than symbolic differentiation and it is my weapon of choice for difficult, by which I mean extremely lengthy, differentiation calculations.

Cancellation error

Unfortunately automatic differentiation suffers from cancellation error in the derivative just as much as symbolic differentiation does. Indeed, it is doubly cursed since, unlike symbolic differentiation, we couldn’t automatically manipulate the form of the calculation to try to reduce its effect even if we wanted to, although we could at least again use interval arithmetic to monitor its effect.

Numerical approximation

As with symbolic differentiation we must still take care when combining automatic differentiation with numerical approximation; the derivative of an approximation of a function may not be a good approximation of the derivative of that function.

The solution is more or less the same as that we used for expression objects; we must simply compute the derivatives explicitly and use them to calculate the coefficients of the function’s Taylor series.

As with expression objects we can do this either using a general purpose algorithm such as the polynomial approximation, or with a specific approximation of the derivative of the function, or, if we’re very fortunate, with the actual formula for the derivative.

Clearly this is no better or no worse than taking the same approach with expression objects, but somehow it seems a more natural union to me. With automatic differentiation we are not trying to maintain an exact representation of the mathematical expression so resorting to approximation doesn’t seem quite so jarring.

Although, as I have already admitted, I am a little biased.

In conclusion, I declare that automatic differentiation is a rock star duck; hardly perfect, but damn cool!

References and further reading

[Knuth74] Knuth, D., Surreal Numbers; How Two Ex-Students Turned on to Pure Mathematics and Found Total Happiness , Addison-Wesley, 1974.

[Ridders82] Ridders, C.J.F., Advances in Engineering Software , Volume 4, Number 2., Elsevier, 1982.

[Robinson74] Robinson, A., Non-Standard Analysis , Elsevier, 1974.