Visual Studio’s Test Explorer allows native C++ tests to be run under a managed wrapper. Malcolm Noyes takes it a step further using Catch to drive the tests.

Recently I adapted Phil Nash’s Catch C++ (and Objective-C) testing framework [ Nash ] to integrate with Visual Studio (VS). I’ve made a fork of Catch available on GitHub [ Catch ], together with some documentation that explains how to use it in that environment [ VS ]. I thought that, for ACCU, it might be more interesting to write up some details about why it does what it does.

For those who are unfamiliar with C++ testing in Visual Studio...

The five minute guide to testing in Visual Studio

First, I should define some terms that I’ll be using. A ‘Managed’ C++ test is one that runs native C++ code (the code we want tested) under a managed C++ wrapper (that creates the test environment). Until VS2012 this was the only kind of C++ unit test that you could write that integrated with the Visual Studio IDE. With VS2012, Microsoft added ‘Native’ C++ tests. These are tests that use a native C++ wrapper to create tests but that can still be run from the Visual Studio IDE.

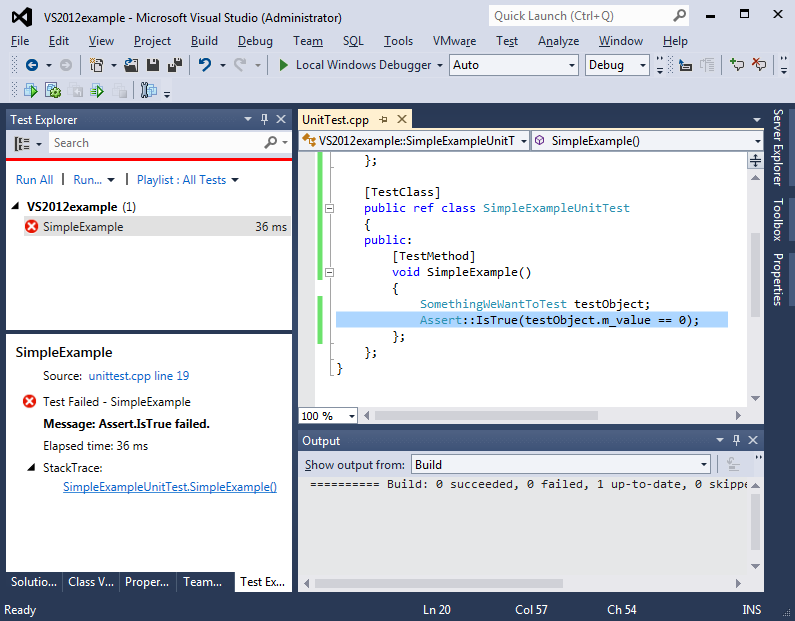

Figure 1 shows an example of a ‘Managed’ test in VS2012. The IDE has the Test Explorer to the left showing that the test has failed. Clicking the highlight at the top of the stack trace (bottom left) opens the code and positions the cursor at the failing line (I’ve manually highlighted the line to make this clearer...).

Figure 1

This is what I wanted to replicate with Catch. So, for those who are unfamiliar with Catch...

The five minute guide to Catch

Catch is a C++ testing framework that is simple to get running (header only, no dependencies) and in the case of failure (or optionally for success) can also provide both the original expression and the values that caused failure. The current version is designed to run from the command line.

To make the command line work, Catch needs a main() function; my personal convention is to create a main.cpp with this content:

    // main.cpp
    #define CATCH_CONFIG_MAIN
    #include "catch.hpp"

Then I create a file for my tests (this file can be shared with Visual Studio) and write a test (see Listing 1).

    // example_tests.cpp
    #include "catch.hpp"

    struct SomethingWeWantToTest {
        SomethingWeWantToTest() : m_value(1) {}
        int m_value;
    };

    TEST_CASE("Simple example") {
        SomethingWeWantToTest testObject;

        SECTION("First section, fails") {
            REQUIRE(testObject.m_value == 0);
        }

        SECTION("Second section, works") {
            REQUIRE(testObject.m_value == 1);
        }
    }

Listing 1

The ‘test case’ is defined with free-form text for the name, and its body creates an instance of the object that we want tested. When the tests are run, Catch loops through the TEST_CASE once for each SECTION defined, so in this example the TEST_CASE gets run twice. This creates a new, initialised testObject each time the TEST_CASE is run; for this reason many Catch tests require no setup() or teardown() methods.
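
The re-running behaviour can be modelled in a few lines. The sketch below is a simplified illustration, not Catch’s implementation: SectionTracker and its methods are hypothetical names, nested sections are ignored, and failures are not modelled. The body passed to run() plays the role of the TEST_CASE, so any object it creates (like testObject) is created afresh on each pass.

```cpp
#include <functional>
#include <set>
#include <string>

// Simplified model of section re-execution (hypothetical, not Catch's code):
// the test-case body is run repeatedly, and on each pass only the first
// section that has not yet been run is entered.
class SectionTracker {
public:
    // Returns true if the named section's body should run on this pass.
    bool enter(const std::string& name) {
        if (m_done.count(name) != 0) {
            return false;             // already run on an earlier pass
        }
        if (m_enteredThisPass) {
            m_morePasses = true;      // leave this section for a later pass
            return false;
        }
        m_enteredThisPass = true;
        m_done.insert(name);
        return true;
    }

    // Re-runs the whole body until every section has been entered once and
    // returns the number of passes, i.e. how often the test case was run.
    int run(const std::function<void(SectionTracker&)>& body) {
        int passes = 0;
        do {
            m_enteredThisPass = false;
            m_morePasses = false;
            body(*this);
            ++passes;
        } while (m_morePasses);
        return passes;
    }

private:
    std::set<std::string> m_done;
    bool m_enteredThisPass = false;
    bool m_morePasses = false;
};
```

A body that creates one object and guards two sections with enter() runs twice, creating two objects and entering one section per pass, which matches the Listing 1 behaviour described above.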

The first SECTION will clearly fail, but Catch carries on and runs the TEST_CASE again to run the second SECTION, which works. The output from a test run with default arguments looks like Listing 2.

    test.exe is a Catch v1.0 b23 host application.
    Run with -? for options

    -------------------------------------------------
    Simple example
      First section, fails
    -------------------------------------------------
    c:/Projects/examples/example_tests.cpp:8
    .................................................

    c:/Projects/examples/example_tests.cpp:12: FAILED:
      REQUIRE( testObject.m_value == 0 )
    with expansion:
      1 == 0

    =================================================
    1 test case - failed (2 assertions - 1 failed)

Listing 2

For the failure, the program outputs both the original expression and the values that caused the failure. The final line confirms that the assertion in each SECTION was executed, with 1 failure. Catch can also be run to show the output from successful REQUIRE assertions, along with many other options.

Goals using Catch in Visual Studio

My initial goal was purely selfish; I currently work in an environment where VS is used to implement tests using Microsoft’s test framework. The environment has a couple of serious usability problems [ MSTest ] and this makes testing a somewhat painful experience.

My involvement started with Visual Studio 2010 and later moved on to VS2012. From a fairly early stage I had written some macros that enabled me to share source code between Catch and MSTest, but this didn’t integrate very well with the IDE. Then I discovered by accident that VS2012 had some ‘Native’ C++ unit test support and realised that it should be possible to hook into this directly from Catch. This started a train of thought that made me wonder if I could do the same thing for Managed tests too.

Initially I wanted:

- To be able to write a test with Catch macros and share source code between command line Catch and VS.

- If an assertion failed in the IDE, the test should stop and the IDE should allow me to jump to the location of the problem, just like it does in MSTest.

This last requirement is slightly different from running regular Catch from the command line; normally we expect that Catch will do its best to run as many of the tests as possible, then report all of the problems at the end of the run. In the IDE, I wanted it to stop, and that meant that I had to tinker with some of Catch’s internals...

First implementation

So I spent a couple of days doing an experiment and ended up with something that seemed to meet these goals. To make it work, I had to do three things:

- Redefine the TEST_CASE macro.
- Rewrite the reporter so that it reported at the end of the test.
- Hook up the assertion to the relevant MSTest mechanism.

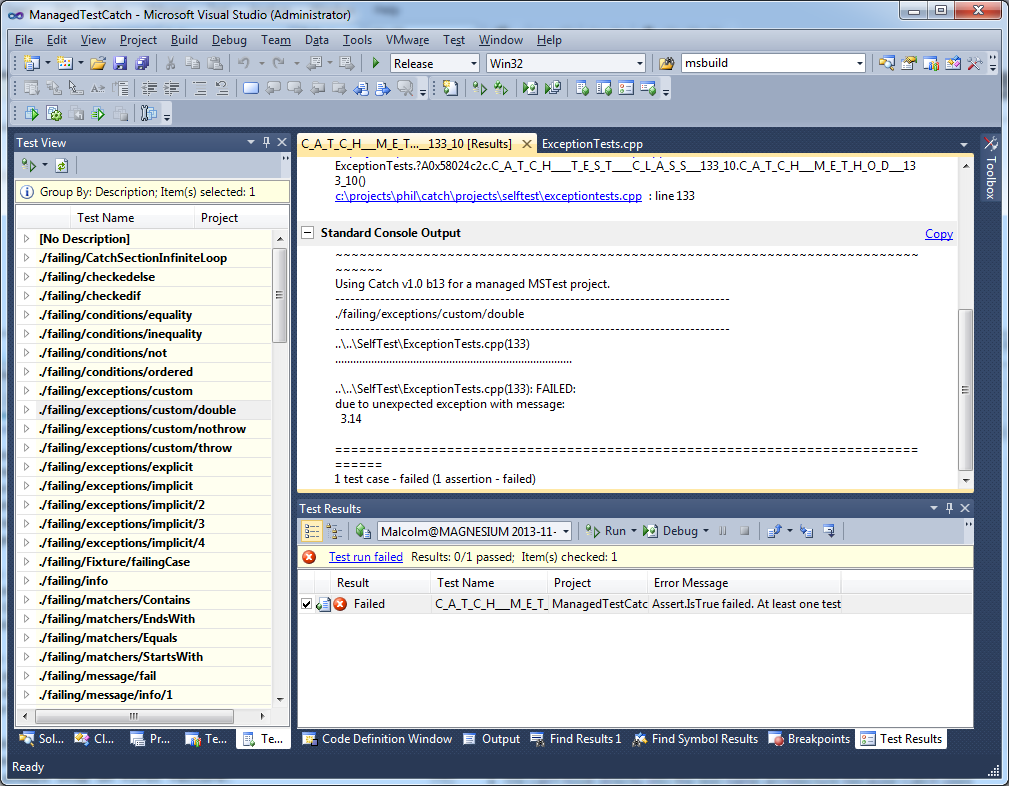

The result looks like Figure 2 (VS2010).

Figure 2

Changes to the TEST_CASE macro

The TEST_CASE macro maps to an underlying INTERNAL_CATCH_TESTCASE macro that needed a completely new definition, as did the very similar macros for testing class methods.

My first version of these macros created a test class (either a ‘Managed’ test class or a ‘Native’ test class), generated a semi-unique name for a function to be invoked, and then called it (more about the ‘semi’-uniqueness later...). As in regular Catch, the body of the invoked TEST_CASE function follows the macro and is written by the user. The Catch ‘test name’ is passed as a property attribute to the test class so that it can be displayed in the IDE. In the first version, the Catch ‘description’ field was discarded.

    // TEST_CASE maps to INTERNAL_CATCH_TESTCASE
    // First param is test name
    // Second param is 'description',
    // often used for tags
    TEST_CASE( "./failing/exceptions/double",
               "[.][failing]" )
    {
        //...
    }
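
A minimal sketch of a macro in that spirit follows, assuming a plain C++ struct in place of the real Managed or Native test class. SKETCH_TEST_CASE, SketchTestClass_ and sketchTestFn_ are hypothetical names, not the fork’s. The names are built by pasting __LINE__, which is why they are only semi-unique: two files that invoke the macro on the same line generate the same class name.

```cpp
#include <string>

// Two-step concatenation so that __LINE__ is expanded before pasting.
#define SKETCH_CONCAT_IMPL(a, b) a##b
#define SKETCH_CONCAT(a, b) SKETCH_CONCAT_IMPL(a, b)

// Hypothetical stand-in for a rewritten INTERNAL_CATCH_TESTCASE.
// Every __LINE__ expands to the line of the invocation, so the function and
// its wrapper class share one "semi-unique" suffix.
#define SKETCH_TEST_CASE(testName)                                        \
    static void SKETCH_CONCAT(sketchTestFn_, __LINE__)();                 \
    struct SKETCH_CONCAT(SketchTestClass_, __LINE__) {                    \
        /* The test name, as an IDE attribute would expose it. */         \
        static std::string name() { return testName; }                    \
        /* The test method: forwards to the user's function. */           \
        static void run() { SKETCH_CONCAT(sketchTestFn_, __LINE__)(); }   \
    };                                                                    \
    static void SKETCH_CONCAT(sketchTestFn_, __LINE__)()

int g_bodyRuns = 0;

// #line makes the next line number 100, so the generated names are
// sketchTestFn_100 and SketchTestClass_100 (for illustration only).
#line 100
SKETCH_TEST_CASE("Simple example") {
    ++g_bodyRuns;  // the braces after the macro become sketchTestFn_100's body
}
```

Because the macro ends with an open function signature, the braces the user writes after it become the function body. The #line directive is only there so that the snippet can name the generated class; in the IDE the test class would be found through its attributes instead.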

Since Catch is header only, I also had to adapt the code to allow for functions that would be #included multiple times. Regular Catch ‘knows’ which module contains main(), so it only includes certain headers in that module. An MSTest project in Visual Studio doesn’t require a main() function, so catch.hpp needs to pull in everything in each module. Consequently, some functions had to be made inline and a couple of static members had to be replaced by templates, so that the compiler and linker would keep just one instance across all modules.
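
The template trick can be sketched as a header fragment. TestNameStore, addTestName and testNameCount are invented names, and the sketch assumes a pre-C++17 compiler such as VS2012, which lacks inline variables.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical header content, included by every test module.
// A plain class's static data member needs exactly one out-of-line definition
// in one .cpp file; a static data member of a class template may be defined
// in the header, and the linker keeps a single instance across modules.
template <typename Unused = void>
struct TestNameStore {
    static std::vector<std::string> s_names;
};

template <typename Unused>
std::vector<std::string> TestNameStore<Unused>::s_names;

// Functions defined in a header must be inline, for the same reason.
inline void addTestName(const std::string& name) {
    TestNameStore<>::s_names.push_back(name);
}

inline std::size_t testNameCount() {
    return TestNameStore<>::s_names.size();
}
```

With C++17, an inline static data member achieves the same effect without the template.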

Changes to the reporter

The first reporter looked very similar to the Catch ‘console’ reporter. The main change was to collect together all the information that needed to be reported when the test completed, so that it could be sent to the output windows in VS...

...except that Phil had optimised a couple of functions that returned strings by using a static std::string. It turns out that Managed C++ doesn’t like this much (‘This function must be called in the default domain’ in atexit, presumably as a result of trying to release the memory held by the std::string). So (for now) those functions had to return a new string each time. Ho hum...
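
The two shapes of function involved look roughly like this. The function names and the width constant are hypothetical; the text does not say which Catch functions were changed.

```cpp
#include <cstddef>
#include <string>

// Hypothetical width used only for this illustration.
const std::size_t kRuleWidth = 79;

// Optimised form: the string is built once and kept in a function-local
// static, whose destructor runs during program shutdown (the stage at which
// the managed build reported its error).
inline const std::string& ruleCached() {
    static const std::string rule(kRuleWidth, '-');
    return rule;
}

// Workaround form: return a fresh string on every call, so no static
// std::string outlives main().
inline std::string ruleFresh() {
    return std::string(kRuleWidth, '-');
}
```

The cost of the workaround is one allocation per call, which is usually negligible in reporting code.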

Assertions

Catch has a number of macros that check for failures (REQUIRE, CHECK, REQUIRE_FALSE, etc.), but underlying all of them is an INTERNAL_CATCH_ACCEPT_EXPR macro that helpfully throws an exception if the test needs to abort. All I had to do to make this work was to call the MSTest assertion with the relevant details of the failure instead of throwing. That would give me the context that I wanted, so that the IDE could point me to the problem when I clicked the link.
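
A sketch of the idea follows, assuming a single pluggable failure handler. By default it throws (as command-line Catch does to abort a test); an IDE build would replace it with a handler that passes the file, line and message to the MSTest assertion instead. AssertionInfo, TestAborted, failureHandler, acceptExpression and SKETCH_REQUIRE_EQ are hypothetical names, and only an equality check is supported.

```cpp
#include <functional>
#include <sstream>
#include <stdexcept>
#include <string>

// Details of a failed assertion (simplified, hypothetical structure).
struct AssertionInfo {
    std::string file;
    int line;
    std::string expression;
    std::string expansion;
};

// Hypothetical exception used to abort the current test on the command line.
struct TestAborted : std::runtime_error {
    explicit TestAborted(const std::string& what) : std::runtime_error(what) {}
};

using FailureHandler = std::function<void(const AssertionInfo&)>;

// Default behaviour: throw. An IDE build would install a handler that hands
// the same details to the host framework's assertion instead.
inline FailureHandler& failureHandler() {
    static FailureHandler handler = [](const AssertionInfo& info) {
        std::ostringstream os;
        os << info.file << ':' << info.line << ": FAILED: REQUIRE( "
           << info.expression << " ) with expansion: " << info.expansion;
        throw TestAborted(os.str());
    };
    return handler;
}

inline void acceptExpression(bool passed, const AssertionInfo& info) {
    if (!passed) {
        failureHandler()(info);
    }
}

// Equality-only REQUIRE: evaluates each operand once and records both the
// original expression text and the expanded values.
#define SKETCH_REQUIRE_EQ(a, b)                                          \
    do {                                                                 \
        const auto& sketchLhs_ = (a);                                    \
        const auto& sketchRhs_ = (b);                                    \
        std::ostringstream sketchExp_;                                   \
        sketchExp_ << sketchLhs_ << " == " << sketchRhs_;                \
        acceptExpression(sketchLhs_ == sketchRhs_,                       \
                         AssertionInfo{__FILE__, __LINE__, #a " == " #b, \
                                       sketchExp_.str()});               \
    } while (false)
```

Keeping the decision in one place means the assertion macros themselves do not need to know which environment they are running in.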

Some feature creep

Catch already has an extensive internal self test suite, which is slightly complicated because, being a test framework, Catch needs to check failures as well as tests that pass. I had decided that the best way to check that my code worked would be to build a project that used all of the internal test suite, and one of the first modules I encountered was the VariadicMacrosTests file.

I had already started to prepare a blog post about what I had done, and as part of that I created a new VS test project from scratch using the VS wizard. However, I noticed that the wizard generated a project that used Unicode by default. I wanted to be able to use multi-byte strings (MBCS) as well. ‘Simple’, you may think, and indeed it was until I encountered the variadic macros in Catch. The problem is that some of the strings needed to be passed as wide strings (e.g. to test class attributes) and some didn’t (e.g. those used internally by Catch). When it isn’t known how many parameters have been passed to the macro, it can become tricky to convert a possibly non-existent value! Much of the complexity in my implementation of the TEST_CASE macros is there to deal with this problem.

I also discovered that Native C++ tests didn’t want to play nicely with anonymous namespaces. I tried several ways to fool the compiler into allowing a unique class name to be used, but in the end I had to accept that each test needed to go into a namespace uniquely named for each file. If I didn’t do this, there was a risk that the semi-unique name generated by the TEST_CASE macro would cause a name clash between modules.

Sadly, if there is a duplicate name, VS silently ignores one of them and runs only one of the tests, so I had to check for a name clash manually and generate an error if it happened. The workaround for both these problems is pretty simple: since neither Catch nor VS cares what namespace the tests are in, the TEST_CASEs in a module should go into a namespace named after that module, e.g.:

    // module1.cpp
    namespace module1 {
        TEST_CASE("blah") {
            //...
        }
    }
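
The text does not say how the name-clash check was implemented. One way to do it at registration time, keyed on the namespace-qualified name that the macro generates, is sketched below; GeneratedNameRegistry and the example names are hypothetical.

```cpp
#include <cstddef>
#include <map>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical registry keyed by the generated (namespace-qualified) name.
// It refuses duplicates loudly instead of silently keeping only one test.
class GeneratedNameRegistry {
public:
    void add(const std::string& generatedName, const std::string& testName) {
        const auto inserted = m_tests.insert(std::make_pair(generatedName, testName));
        if (!inserted.second) {
            throw std::logic_error("name clash on '" + generatedName + "': \"" +
                                   testName + "\" vs \"" + inserted.first->second + "\"");
        }
    }

    std::size_t size() const { return m_tests.size(); }

private:
    std::map<std::string, std::string> m_tests;
};
```

Two tests generated on the same line of different files only coexist here because their module namespaces make the keys differ.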

Remarkably, most other things just worked... but I had some trouble with Catch’s ability to register and translate unknown exceptions...

More feature creep

Although I suspected that the feature wasn’t used much, I was sure that it should be possible to implement some code that would allow me to translate unknown exceptions. I realised that to make it work I would have to fix up Catch’s static registration. The existing macros worked like this. First, define a translation:

    CATCH_TRANSLATE_EXCEPTION( double& ex )
    {
        return Catch::toString( ex );
    }

Then, when a test throws an unexpected exception, the translated value is sent to the output. For example, this test:

    TEST_CASE( "Unexpected exceptions can be translated", "[.][failing]" )
    {
        if( Catch::isTrue( true ) )
            throw double( 3.14 );
    }

will send this to the output:

    ...
    c:\projects\catch\projects\selftest\exception tests.cpp(130): FAILED:
    due to unexpected exception with message:
      3.14

In common with many other test frameworks, Catch has a global registration object that it uses to register tests, and it also uses this to register reporters and exception translators, so initially I just implemented the exception translators to use a static templated object.
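
The usual C++ technique behind translators like this is to rethrow the exception currently being handled inside a try block and let each translator try its own catch clause. The sketch below shows that technique with a function-local static registry. TranslatorRegistrar, translators and translateActiveException are hypothetical names rather than Catch’s classes, and translateActiveException must be called from inside a catch block.

```cpp
#include <exception>
#include <functional>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// A translator rethrows the in-flight exception; if it has the right type it
// writes a message and returns true, otherwise it returns false.
using Translator = std::function<bool(std::string&)>;

inline std::vector<Translator>& translators() {
    static std::vector<Translator> registered;  // created on first use
    return registered;
}

// Hypothetical static registration helper in the spirit of
// CATCH_TRANSLATE_EXCEPTION (not its actual implementation).
template <typename T>
struct TranslatorRegistrar {
    explicit TranslatorRegistrar(std::string (*convert)(T&)) {
        translators().push_back([convert](std::string& message) -> bool {
            try {
                throw;  // rethrow the exception currently being handled
            } catch (T& ex) {
                message = convert(ex);
                return true;
            } catch (...) {
                return false;
            }
        });
    }
};

// Must be called while an exception is being handled.
inline std::string translateActiveException() {
    for (const Translator& translate : translators()) {
        std::string message;
        if (translate(message)) {
            return message;
        }
    }
    try {
        throw;
    } catch (const std::exception& ex) {
        return ex.what();
    } catch (...) {
        return "Unknown exception";
    }
}

// Equivalent of the double translation shown earlier.
inline std::string translateDouble(double& ex) {
    std::ostringstream os;
    os << ex;
    return os.str();
}

static TranslatorRegistrar<double> s_doubleTranslator(&translateDouble);
```

Exceptions that no translator recognises fall back to std::exception::what() or a generic message.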

Once I’d done that I could run each individual test from the self test suite in the IDE and manually check that the output matched the output from Catch.

A fortunate coincidence

I had been thinking about one of the other tests in the test suite that I hadn’t managed to implement. The code collected all the registered tests, worked out whether they were expected to ‘pass’ or ‘fail’ and then ran them all in two batches, one for passes and one for failures. Around this time, Phil had written a Python script that verified the expected output from running all tests, so he removed this code. I had a feeling this code might be useful, and so it prompted me to think about how I could perhaps use a similar technique to run all the tests automatically in VS. My idea was to run all the tests in a ‘batch’, then use a similar Python script to generate compatible output from the VS output, instead of having to manually check every time.

However, in that version I didn’t register any tests: my initial implementation of TEST_CASE just created a test class and ran the method inline, so it didn’t need registration. After a little tinkering with a few angle brackets, I discovered that I could indeed implement the whole of Catch’s registration mechanism in VS. Then I started to wonder what else I could do with it...
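
Static self-registration, the general mechanism behind a registry like this, can be sketched in plain C++. The macro defines a test function and a file-scope registrar object whose constructor adds the function to a list before main() runs. All names here (SKETCH_REGISTERED_TEST, AutoRegistrar, registeredTests, runAllRegistered) are hypothetical.

```cpp
#include <cstddef>
#include <string>
#include <vector>

struct RegisteredTest {
    std::string name;
    std::string tags;
    void (*body)();
};

inline std::vector<RegisteredTest>& registeredTests() {
    static std::vector<RegisteredTest> tests;  // created on first use
    return tests;
}

// A file-scope object of this type registers its test during static
// initialisation, before main() starts.
struct AutoRegistrar {
    AutoRegistrar(const char* name, const char* tags, void (*body)()) {
        registeredTests().push_back(RegisteredTest{name, tags, body});
    }
};

#define SKETCH_CONCAT_IMPL(a, b) a##b
#define SKETCH_CONCAT(a, b) SKETCH_CONCAT_IMPL(a, b)

// Hypothetical registering test macro.
#define SKETCH_REGISTERED_TEST(name, tags)                          \
    static void SKETCH_CONCAT(sketchTestFn_, __LINE__)();           \
    static AutoRegistrar SKETCH_CONCAT(sketchRegistrar_, __LINE__)( \
        name, tags, &SKETCH_CONCAT(sketchTestFn_, __LINE__));       \
    static void SKETCH_CONCAT(sketchTestFn_, __LINE__)()

// Runs every registered test and returns how many were run.
inline std::size_t runAllRegistered() {
    for (const RegisteredTest& test : registeredTests()) {
        test.body();
    }
    return registeredTests().size();
}

int g_testBodiesRun = 0;

SKETCH_REGISTERED_TEST("First test", "[fast]") { ++g_testBodiesRun; }
SKETCH_REGISTERED_TEST("Second test", "[vs]") { ++g_testBodiesRun; }
```

Within one file the registrars run in definition order; across files the order is left to the compiler and linker.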

A new goal!

I started with a new macro that didn’t register a Catch test case but instead asked the Catch registration object to run all the tests. That worked, but I knew that Phil was able to use Catch’s ‘tag’ filtering to run different groups of tests selectively, and I wanted to be able to do the same thing. I also realised that if I could somehow call this ‘batch test’ using the VS command line tools, I would be able to integrate tests written for Catch easily into other Continuous Integration environments, such as TeamCity, TFS or Jenkins.

During my first encounter with Native C++ tests I had discovered that VS2012 Native tests could not use MSTest.exe to run them from the command line. Instead it seemed that MSTest.exe had been deprecated in favour of vstest.console.exe that had the necessary plumbing to understand binaries built for native tests and run them. A brief look at the command line parameters for both tools suggested the options for passing filters into tests was going to be limited to a single textual parameter (‘Category’ for MSTest and, somewhat bizarrely, ‘Owner’ for Native vstest.console.exe).

I did explore the possibility of feeding parameters into the tests as a database, but that seemed overly convoluted and it wasn’t clear whether it would work in Native C++ tests (I don’t think it does...). So I ended up with a macro, the snappily named CATCH_MAP_CATEGORY_TO_TAG, whereby I could specify an identifier that would be recognised by the VS tools and that I could ‘map’ to Catch ‘tags’ to provide filtering using the existing Catch code:

    CATCH_MAP_CATEGORY_TO_TAG(all, "~[vs]");

This runs all the registered tests except those tagged ‘[vs]’, which corresponds to the default run of Catch on the command line. This macro also changes the default behaviour of Catch so that, instead of stopping immediately (as we need for the IDE), it runs as many tests as possible. Sadly, this change in behaviour also exposed some shortcomings in my implementation of the reporter and capture mechanisms; in some circumstances expected output would be lost. This required a bit more rework of Catch’s internals; in particular, I found that I needed to push the current test state onto the stack so that the reporter could use that information when a failing test was unwound.
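
A simplified model of mapping a category such as all to a tag filter such as ~[vs] is sketched below. It rests on assumptions: the filter only understands [tag] and ~[tag] terms that must all hold, which is far less than Catch’s real test-spec syntax, and parseTags, matchesSpec, categoryToSpec and shouldRun are hypothetical names.

```cpp
#include <map>
#include <set>
#include <string>

// Extract the tags from a string such as "[.][failing]".
inline std::set<std::string> parseTags(const std::string& tags) {
    std::set<std::string> result;
    std::string::size_type open = tags.find('[');
    while (open != std::string::npos) {
        const std::string::size_type close = tags.find(']', open);
        if (close == std::string::npos) break;
        result.insert(tags.substr(open + 1, close - open - 1));
        open = tags.find('[', close);
    }
    return result;
}

// Simplified filter: every "[tag]" term must be present on the test and
// every "~[tag]" term must be absent.
inline bool matchesSpec(const std::string& spec, const std::string& testTags) {
    const std::set<std::string> have = parseTags(testTags);
    std::string::size_type open = spec.find('[');
    while (open != std::string::npos) {
        const std::string::size_type close = spec.find(']', open);
        if (close == std::string::npos) break;
        const bool excluded = open > 0 && spec[open - 1] == '~';
        const bool present = have.count(spec.substr(open + 1, close - open - 1)) != 0;
        if (present == excluded) return false;  // term not satisfied
        open = spec.find('[', close);
    }
    return true;
}

// Hypothetical table of category name (what the VS tools filter on) to the
// tag spec that should be run for it.
inline std::map<std::string, std::string>& categoryToSpec() {
    static std::map<std::string, std::string> table;
    return table;
}

inline bool shouldRun(const std::string& category, const std::string& testTags) {
    const auto found = categoryToSpec().find(category);
    return found != categoryToSpec().end() && matchesSpec(found->second, testTags);
}
```

The VS tools only ever see the category name; all the tag logic stays on the Catch side of the mapping.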

Then I developed some new Python scripts that parsed and verified the Catch output and compared that against the output from MSTest.exe/vstest.console.exe (output from these tools can be directed to a .trx file). Aside from some minor presentation differences, this worked well and was good enough to validate that the VS code is doing the right thing, at least for ‘all’ tests.

The final section

There were still two things that I couldn’t validate, though: the Catch self test validation script runs one set of tests that shows output from successful tests, and another that aborts after 4 failures. Both behaviours can easily be changed using different parameters on the Catch command line. Could I replicate this somehow? What I wanted was to be able to define test parameters before I used CATCH_MAP_CATEGORY_TO_TAG. Such changes should apply to the current batch test run only; subsequent test runs should revert to the defaults. A few additional macros and some additional configuration classes allowed me to do this too:

    CATCH_CONFIG_SHOW_SUCCESS(true)
    CATCH_CONFIG_WARN_MISSING_ASSERTIONS(true)
    CATCH_MAP_CATEGORY_TO_TAG(allSucceeding, "~[vs]");
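
The ‘apply to this batch run only’ rule maps naturally onto a scope guard. The sketch assumes hypothetical names (RunConfig, pendingOverrides, activeConfig, BatchRunScope) and a settings structure that merely mirrors the options mentioned in the text.

```cpp
// Hypothetical settings that configuration macros could adjust.
struct RunConfig {
    bool showSuccess = false;
    bool warnMissingAssertions = false;
    int abortAfter = 0;  // 0 means "never abort"
};

// Overrides collected (e.g. by configuration macros) before the next batch run.
inline RunConfig& pendingOverrides() {
    static RunConfig pending;
    return pending;
}

// Settings in force for the run that is currently executing.
inline RunConfig& activeConfig() {
    static RunConfig active;
    return active;
}

// One batch run: apply the pending overrides, then restore the previous
// settings (and clear the overrides) when the scope ends, so the next
// batch starts from the defaults again.
class BatchRunScope {
public:
    BatchRunScope() : m_previous(activeConfig()) {
        activeConfig() = pendingOverrides();
        pendingOverrides() = RunConfig();
    }
    ~BatchRunScope() { activeConfig() = m_previous; }

    BatchRunScope(const BatchRunScope&) = delete;
    BatchRunScope& operator=(const BatchRunScope&) = delete;

private:
    RunConfig m_previous;
};
```

Restoring in the destructor means the defaults come back even if the batch run exits early with an exception.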

Running the batch this way produced all the correct output, but in the wrong order. The order in which the tests are run depends on their order of registration with the global registrar, which of course depends on the order in which the compiler and linker decide to initialise static objects. Phil normally uses OS X to develop Catch, and it does things in a different order from VS. The solution is to sort the output by test name in the validation scripts before comparing it.
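
The validation scripts are written in Python; the same order-insensitive comparison is shown here in C++ for consistency with the other examples. TestOutput and sameOutputIgnoringOrder are hypothetical names, and each test’s output is assumed to have already been split out as a (name, text) pair.

```cpp
#include <algorithm>
#include <string>
#include <utility>
#include <vector>

// (test name, captured output) for one test in a run.
using TestOutput = std::pair<std::string, std::string>;

// Compare two runs while ignoring the order in which the tests executed:
// sort each copy (by name, then by output) and compare the results.
inline bool sameOutputIgnoringOrder(std::vector<TestOutput> first,
                                    std::vector<TestOutput> second) {
    std::sort(first.begin(), first.end());
    std::sort(second.begin(), second.end());
    return first == second;
}
```

Taking the vectors by value lets the function sort its own copies without disturbing the caller’s data.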

The same problem afflicts the test that aborts after 4 failures, but the effect is slightly different. The reference output was presumably produced with Xcode/Clang on OS X, and the tests that build executed before reaching 4 failures were different from those VS executed, so I got a completely different set of failures! So finally I had to add two more macros: one that ‘registers’ a test to be run in a specific order and one that runs the ordered list of tests (Listing 3).

    CATCH_INTERNAL_CONFIG_ADD_TEST("Some simple comparisons between doubles")
    CATCH_INTERNAL_CONFIG_ADD_TEST("Approximate comparisons with different epsilons")
    CATCH_INTERNAL_CONFIG_ADD_TEST("Approximate comparisons with floats")
    ...
    INTERNAL_CATCH_MAP_CATEGORY_TO_LIST(allSucceedingAborting);

Listing 3
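
Why an explicit order matters once a run can abort early is easy to see in a small model. In this sketch (hypothetical names throughout) each test returns its number of failed assertions and the run stops between tests once the limit is reached; real Catch counts failures assertion by assertion, so it can stop part-way through a test.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical: a test body returns the number of assertions that failed.
using CountingTest = int (*)();

struct OrderedRunResult {
    std::vector<std::string> ran;
    int failures = 0;
};

// Runs tests in an explicit order (rather than registration order) and stops
// once abortAfter failures have accumulated; 0 means never stop.
inline OrderedRunResult runInOrder(const std::vector<std::string>& order,
                                   const std::map<std::string, CountingTest>& tests,
                                   int abortAfter) {
    OrderedRunResult result;
    for (const std::string& name : order) {
        const auto found = tests.find(name);
        if (found == tests.end()) continue;  // unknown names are skipped here
        result.ran.push_back(name);
        result.failures += found->second();
        if (abortAfter > 0 && result.failures >= abortAfter) break;
    }
    return result;
}
```

With tests a, b and c failing 2, 3 and 0 assertions and a limit of 4, the order a, b, c stops after b, while c, a, b runs all three: the same tests, but a different set of reported failures.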

Wrap up

I now have a very flexible test environment that I can use to share Catch source code between Visual Studio and command line Catch. If I want to avoid the torture of the Test Explorer, I can run Catch from the command line by simply adding a main.cpp that specifies CATCH_CONFIG_MAIN. For those times when I need to resort to the debugger, I can easily run individual tests in the IDE. As a bonus, I can also specify a ‘batch run’ that uses the built-in VS command line tools, which means that integration with TeamCity (or other CI environments) should be easy. I think my goals have been met; if you are suffering from similar frustrations, please give the fork a try and let me know what works and what doesn’t.

Finally, I’ve been discussing with Phil the possibility that the code for my fork could be merged back into the mainline; my understanding is that he is keen to do this, although as far as I know he hasn’t had time to take a good look at what I’ve done to his code yet! So I hope that this will happen, or perhaps will have happened by the time you read this...

References

[Catch] My fork of Catch: https://github.com/colonelsammy/Catch

[Nash] Phil Nash’s Catch framework: https://github.com/philsquared/Catch

[VS] Documentation for VS integration: https://github.com/colonelsammy/Catch/blob/master/docs/vs/vs-index.md

[MSTest] Replacing MSTest with Phil Nash’s Catch framework for managed tests: http://www.graoil.co.uk/blog/2013/10/28/replacing-mstest-with-phil-nashs-catch-framework-for-managed-tests/