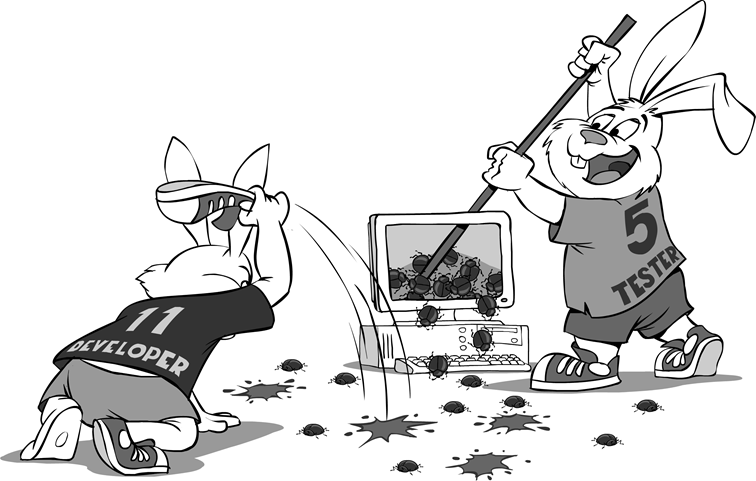

Software development needs a team of supporting players. Sergey Ignatchenko takes a look at the role of professional testers.

The superfluous man (Russian: лишний человек, lishniy chelovek) is an 1840s and 1850s Russian literary concept derived from the Byronic hero. It refers to an individual, perhaps talented and capable, who does not fit into social norms.

~ Wikipedia

Disclaimer: as usual, opinions within this article are those of ‘No Bugs’ Bunny, and do not necessarily coincide with the opinions of the translator or Overload editors; please also keep in mind that translation difficulties from Lapine (like those described in [Loganberry04]) might have prevented an exact translation. In addition, both the translator and Overload expressly disclaim all responsibility for any action or inaction resulting from reading this article.

This article is intended to open a mini-series on the people who are often seen as ‘superfluous’ by management or by developers (and often by both); this includes, but is not limited to, testers, UX (User eXperience) specialists, and BAs (Business Analysts). In practice, however, they are very useful (that is, if you can find a good person for the job, which admittedly can be difficult). This article discusses the role of testers in the development flow.

Hey, we already have test-driven development, testers are so XX century!

Assumption is the mother of all screw-ups

~ Wethern’s Law of Suspended Judgment

When I raise the question of testers in modern developer circles, the first reaction is frequently surprise. Next, if the developers are kind enough not to take me by the ears and throw me out immediately, they start explaining all the benefits of automated unit testing and/or test-driven development. I can assure all readers that I know the benefits of these concepts, and sometimes I even use them myself.

Still, even if you have test-driven development (which is often a good thing; there is no argument about that), it doesn’t mean you’ve got a silver bullet that guarantees zero bugs. Moreover, there are at least four really important reasons why we cannot expect such tests to cover the whole space of potential bugs.

First of all, the hardest bugs to find are usually integration bugs. How often do you face the following situation: “Both modules work fine separately, but together they fail; to make things worse, they fail in only 20% of common use cases”? I’m willing to bet all the carrots in the world that in any development involving more than one person, such a situation is not just ‘common’; it takes up at least 50% of the system debugging time. In the theory of test-driven development, whoever combined these two modules is responsible for writing test cases for all possible interactions, but this never happens if the modules are non-trivial. Some tests are written, sure (often as few as one, just to have something to run), but all of them? No way. The next two reasons go some way towards explaining why.

The second reason is related to psychology. A developer’s mindset is usually ‘to create something’, not ‘to break something’. This is especially true when you need to look for ways to break your own creation. ‘To create a test demonstrating that your creation works’ is one thing (and this is what happens in test-driven development), but ‘to look for creative ways to break your own creation’ is a very different story. Sometimes this effect may be mitigated by having developer A write code and developer B write test cases for it (and vice versa), but such a policy essentially means that each developer works as a half-time tester, nothing more.

The third reason is that when higher-level, more complicated modules are being integrated, the developer doing it simply does not have enough information to find all the relevant test cases. In practice, module documentation rarely goes beyond Doxygen comments, and never describes all the relevant details (not that I’m claiming this can be done at all). This means that unless the developer performing the integration wrote both modules, she doesn’t have sufficient information to write an exhaustive set of test cases (not to mention that doing so may be prohibitively expensive). In theory, one shouldn’t rely on anything that has not been tested in the modules’ unit tests, but even this is not realistic, and it doesn’t protect against integration issues.

The fourth reason is that the very same developer also has too much information about the program. Often the developer ‘knows’ how it should work and assumes (!) that it does, which inevitably leads to test cases being omitted. On the other hand, the developer often doesn’t know all the details of how the code is going to be used (especially those scenarios which are not supposed to happen, but will arise in real life anyway).
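To make the first reason (and the fourth) a bit more concrete, here is a deliberately tiny, hypothetical Python sketch: two ‘modules’ written by different developers, each passing its own unit tests, which fail once combined because of a unit mismatch that neither author thought to test. All names are made up for illustration; real integration bugs are usually much better hidden.

```python
# Module A (hypothetical, written by developer #1):
# converts two timestamps given in seconds into an elapsed time in MILLISECONDS.
def elapsed_ms(start_s: float, end_s: float) -> float:
    return (end_s - start_s) * 1000.0


# Module B (hypothetical, written by developer #2):
# decides whether a request timed out; its author assumed the input is in SECONDS.
TIMEOUT_S = 5.0


def is_timed_out(elapsed_s: float) -> bool:
    return elapsed_s > TIMEOUT_S


# Each module passes its own unit tests...
def test_elapsed_ms() -> None:
    assert elapsed_ms(10.0, 10.5) == 500.0


def test_is_timed_out() -> None:
    assert not is_timed_out(0.5)
    assert is_timed_out(6.0)


# ...but the integration is wrong: any request longer than 5 ms but not longer
# than 5 s is wrongly reported as timed out, while very short and very long
# requests get the right answer, so the bug shows up in only some cases.
def request_timed_out(start_s: float, end_s: float) -> bool:
    return is_timed_out(elapsed_ms(start_s, end_s))


if __name__ == "__main__":
    test_elapsed_ms()
    test_is_timed_out()
    print(request_timed_out(0.0, 0.5))  # prints True: a 0.5 s request "times out"
```

Whoever integrates the two modules would catch this only by thinking to check a mid-range duration. A tester asking “what happens with a one-second request?” stumbles on it naturally.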

In three of the four cases listed above (#1, #2, and #4), a tester performing high-level testing has a clear advantage over a developer doing unit testing (there are other cases where unit tests have the advantage over high-level testing, but they are beyond our scope, as we’re not arguing that unit testing is unnecessary). Based on the reasoning above, and on many years of experience, IMNSHO testers are necessary in most projects developed by more than one or two people.

With this in mind, we’ve answered the question “Do we need testers if we have test-driven development?” and can proceed to the next ones: “What are we trying to achieve?”, “How do we convince management that we need testers?”, and “How do we organize a useful testing team?” (even if the ‘team’ is as small as one tester).

Goals of the testing team

Each team should have well-defined goals. The goal of a testing team is to improve product quality (some may argue that it is to ‘assure quality’, but as a zero-defect product is a non-existent beast, it is better to admit this and make it clear that the team should actively look for bugs rather than passively say “Everything is OK”).

Improving product quality is a very broad task, which includes at least the following (some of these tasks are discussed in more detail below, under ‘How to organize a testing team’):

- automated regression testing: sure, unit tests should be run by the developers themselves, but integration testing should include automated regression testing too

- actively looking for ways to break the program; for example, a good tester usually asks questions like “Hey, what if a user presses this button right now?”

- if applicable: monitoring end-user feedback and producing reproducible bug reports out of it

- if there is no UX team: reporting ‘usability defects’, also (if applicable) from end-user feedback
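As a rough illustration of what an automated regression set can look like, here is a minimal Python sketch. It assumes a hypothetical function under test (`normalize_username`) and hypothetical bug-tracker IDs. The point is only the shape: every bug that was found and fixed leaves a test case behind, and the whole set is re-run automatically. In a real project this would live in your test framework of choice rather than in a hand-rolled runner.

```python
from typing import Callable, List, Tuple


# Hypothetical function under test; the name and behaviour are illustrative only.
def normalize_username(name: str) -> str:
    """Strip surrounding whitespace and lower-case a user name."""
    return name.strip().lower()


# One entry per bug that was found and fixed: (bug ID, input, expected output).
# The bug IDs are hypothetical placeholders for bug-tracker entries.
REGRESSION_CASES: List[Tuple[str, str, str]] = [
    ("BUG-101", "  Alice ", "alice"),   # surrounding spaces were kept
    ("BUG-117", "BOB", "bob"),          # upper-case names did not match
    ("BUG-142", "\tcarol\n", "carol"),  # tabs/newlines pasted from e-mail
]


def run_regression_set(func: Callable[[str], str],
                       cases: List[Tuple[str, str, str]]) -> List[str]:
    """Return the IDs of all cases whose output no longer matches, i.e. regressions."""
    return [bug_id for bug_id, given, expected in cases if func(given) != expected]


if __name__ == "__main__":
    failed = run_regression_set(normalize_username, REGRESSION_CASES)
    print("regressions:", failed or "none")
```

Suppose someone later ‘simplifies’ the function to a bare `lower()` call. The set then immediately reports BUG-101 and BUG-142 as back, with no tester having to remember them.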

If you’re still sure that you (as a developer) want to do all these things yourself, I give up. For the rest of you, let’s proceed to the next question: “How do we convince management that we need testers?”

Testers from the management perspective

Admittedly, convincing management that testers are needed can be really tough. After all, from an accounting perspective, testers are often seen as an expense that produces nothing tangible (developers at least produce code, which can be seen as an asset, but testers, even good ones, don’t produce any assets). The same logic, however, can be extended: if code is an asset, then a bug in the code (which reduces code quality and user experience) is a liability (in extreme cases, it can be a liability in the legal sense too [Levy89] [Techdirt11]). Therefore, while testers indeed do not produce assets, they do remove liabilities, which has a positive impact on the company’s bottom line (provided that the testers are good, but this holds for all kinds of employees).

Throw in such an all-important thing as improved end-user satisfaction, and this should be enough to convince all but the most stubborn managers that testers are useful; the problem of convincing them that testers are needed ‘right now’ (and not “yes, sure, maybe, some time later”) is left as an exercise for the reader.

How NOT to organize a testing team

At some point in my career, I was working for a really huge company (on the scale of “I’m not sure there is anything larger out there”). There, the testing department for one of the projects consisted of several dozen people who were given instructions like “press such-and-such button; you should see such-and-such screen; if you don’t, report it; if you do, go to the next step”. This is certainly one way not to organize your testing team.

How to organize a testing team

As usual, organizing the development process is more art than science, and this applies to testing teams in spades. However, there are some common observations that may help along the way:

- never ever position testers as inferior to developers. First, they are not inferior (and if they are, you’ve done a poor job of organizing the testing team). Second, doing so will hurt the process badly. Overall, it is usually better to position testers higher than developers (in the sense that developers should fix the bugs found by testers instead of arguing that it is not a bug but a feature); at the very least:

  - opened bugs should be processed according to the severity and priority specified by the tester

  - closing bugs as WONTFIX shouldn’t be too easy for developers, and should involve discussion and/or management approval

- never ever think of testers as inferior developers. Think of them as developers with a different mindset. As discussed above, there are things which developers cannot do, for objective reasons.

- automated testing tools are an absolute must. Situations where testers just press buttons according to instructions must be avoided at all costs. Whenever a bug is found and fixed, a test case for it must be added to the standard regression test set.

- whenever possible, consider writing automated self-testing tools that work below the UI level; for example, if your application is a networked one, one way to test it is not by pressing buttons in the UI client, but by making network requests directly. This may allow testing the system a bit differently (which is always good) and under much heavier load (which is even better). Such automated self-testing tools are normally written by developers and used by testers.

- it should be clearly stated that it is normally the tester’s responsibility to make a bug reproducible (exceptions for intermittent bugs are possible, but they should remain exceptions). Having irreproducible bugs in the bug tracker is bad for several reasons, including these two: first, it forces developers to do the testers’ job (and most of the arguments for having a separate testing team apply here); second, it greatly reduces developers’ enthusiasm for fixing the problem.

- if the product has already been released, at least some portion of testers’ time should be dedicated to going through user complaints, trying to reproduce them, and opening bugs when they are confirmed. For a product with an active user forum, this can work wonders for product quality. This approach risks getting bogged down in singular user complaints (i.e. those which represent a problem for only one user), but provided that the user forum is active enough, a simple “at least N complaints” filter usually does the trick (see also the “we already have two (!) complaints” policy below; it did work in practice).

- one special area is ‘Usability defects’. Strictly speaking, it is better to delegate dealing with such defects to UX specialists (who represent another category of ‘Non-Superfluous People’ and will hopefully be discussed in a separate article). However, if you do not have a separate UX team (which you should, but probably don’t), it should be the testers’ responsibility to complain about ‘Usability defects’ (in other words, about an ‘inconvenient/confusing UI’). At the very least, end-user complaints about ‘Usability defects’ should be taken really seriously and fixed whenever enough users are complaining. After all, it is the end-user who ultimately pays for the development [NoBugs11]. In one company with millions of users, I’ve seen a policy of “Hey, we already have two (!) complaints from end-users; we should do something about it in the next release”. Believe it or not, this policy did result in the company making the best software in its field (and making money out of it too).

- having a testing team does not in any way imply that unit tests and/or test-driven development are unnecessary. Ideally, testing teams should work only with code that has already been unit-tested, and concentrate on (a) automated regression testing and (b) not-so-obvious ways to break the program.
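To illustrate testing below the UI level, here is a minimal Python sketch (standard library only) of a tool that bypasses the UI and fires many concurrent HTTP requests at a server. The server side is a hypothetical stand-in (a `/balance` endpoint returning a fixed JSON document), started in-process so the sketch is self-contained. In real life the tool would talk to your actual server over your actual protocol, which need not be HTTP at all. The request counts and concurrency level are arbitrary example values.

```python
import json
import threading
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.request import urlopen

EXPECTED = {"balance": 100}  # hypothetical reply of the hypothetical endpoint


class BalanceHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the server under test."""

    def do_GET(self) -> None:
        if self.path != "/balance":
            self.send_error(404)
            return
        body = json.dumps(EXPECTED).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the stress run quiet


class StressServer(ThreadingHTTPServer):
    request_queue_size = 128  # allow many pending connections under load


def start_server() -> tuple:
    """Start the stand-in server on a free local port; return (server, base_url)."""
    server = StressServer(("127.0.0.1", 0), BalanceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_address[1]}"


def one_request(url: str) -> bool:
    """Send the request a UI click would trigger; True if the reply is correct."""
    try:
        with urlopen(url, timeout=10) as resp:
            return resp.status == 200 and json.loads(resp.read()) == EXPECTED
    except (OSError, ValueError):  # connection errors, HTTP errors, bad JSON
        return False


def stress(url: str, total: int, concurrency: int) -> int:
    """Send `total` requests from `concurrency` parallel workers; return the failure count."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(one_request, [url] * total))
    return results.count(False)


if __name__ == "__main__":
    server, base = start_server()
    try:
        print("failures:", stress(base + "/balance", total=200, concurrency=20))
    finally:
        server.shutdown()
        server.server_close()
```

A real tool would typically also record latencies and check server-side state after the run. Even this sketch, though, generates a load that testers pressing buttons could not.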
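The ‘at least N complaints’ filter for user-forum feedback is simple enough to sketch. The Python below rests on two assumptions. First, complaints have already been tagged with some issue key, using a hypothetical `(user_id, issue_key)` format. Second, the threshold defaults to 2, mirroring the ‘two (!) complaints’ policy. Counting distinct users rather than posts is a design choice aimed at the singular-user problem: one very vocal user cannot push an issue over the threshold alone.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def issues_worth_reproducing(complaints: Iterable[Tuple[str, str]],
                             min_users: int = 2) -> List[str]:
    """Return issue keys reported by at least `min_users` distinct users,
    most-reported first.

    `complaints` is a sequence of (user_id, issue_key) pairs. How a forum
    post gets mapped to an issue_key (tags, manual triage, ...) is assumed
    to happen elsewhere.
    """
    users_per_issue = defaultdict(set)
    for user_id, issue_key in complaints:
        users_per_issue[issue_key].add(user_id)  # one user posting 10 times counts once
    ranked = sorted(users_per_issue.items(), key=lambda kv: len(kv[1]), reverse=True)
    return [issue for issue, users in ranked if len(users) >= min_users]


if __name__ == "__main__":
    forum = [
        ("u1", "login-timeout"), ("u1", "login-timeout"), ("u2", "login-timeout"),
        ("u3", "login-timeout"), ("u2", "chart-colours"), ("u4", "chart-colours"),
        ("u5", "font-size"),
    ]
    print(issues_worth_reproducing(forum))  # ['login-timeout', 'chart-colours']
```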

Hey, where to find good testers?

Unfortunately, finding good testers is a big problem. However, if you stop thinking of testers as inferior people, and search for them not as an afterthought but in the same way as you look for developers, it usually becomes possible. Yes, finding a good tester is not easy; however, finding a good developer is not easy either, and yet every successful team does it (otherwise it wouldn’t be successful). So try to find at least one good tester to complement your good developers: the improved quality of your product will almost certainly raise user satisfaction (whether it will raise company profits and developer salaries is a different story, but things such as marketing are beyond the scope of both this article and Overload in general).

Testing metrics

When you do have a testing team, the question arises: how do you measure its performance? In practice, I’ve seen two approaches (which can be combined). The first is to measure how many bugs (and of what severity) were found. The problem with this approach is that you need somebody (other than testers and developers) to judge the severity of bugs; if nobody does, the metric quickly degenerates into something as meaningless as the ‘number of lines of code’ metric for developers. The second approach works only if you have strong end-user feedback. In that case, you can estimate both end-user satisfaction and the percentage of bugs that reach end users. While formalizing this further is also not easy (and should be done on a case-by-case basis), this approach provides a very reliable way to see how useful the testers’ work is for the product (and eventually for the company’s bottom line).
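Once the inputs exist, both approaches boil down to simple arithmetic; the hard part, as noted above, is getting honest inputs. The sketch below assumes hypothetical severity weights, which under the first approach should be agreed by someone other than the testers and developers. It computes the ‘percentage of bugs reaching end users’ as user-reported bugs divided by all bugs known for a release, a figure often called the defect escape rate.

```python
from typing import Dict, Iterable

# Hypothetical weights; in practice these must be agreed upon by someone
# other than the testers and developers being measured.
SEVERITY_WEIGHTS: Dict[str, int] = {"critical": 10, "major": 3, "minor": 1}


def weighted_bugs_found(severities: Iterable[str]) -> int:
    """First approach: severity-weighted count of bugs found by testers."""
    return sum(SEVERITY_WEIGHTS[s] for s in severities)


def escape_rate(found_by_testers: int, reported_by_users: int) -> float:
    """Second approach: fraction of a release's known bugs that reached end users."""
    total = found_by_testers + reported_by_users
    return reported_by_users / total if total else 0.0


if __name__ == "__main__":
    print(weighted_bugs_found(["critical", "minor", "minor", "major"]))  # 15
    print(f"{escape_rate(45, 5):.0%}")  # 10%
```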

Summary

- If you think you don’t need a testing team – think again

- Test-driven development doesn’t mean you don’t need a testing team

- The testing team can be as small as one person

- You do not just need ‘any testing team’, you need a good one

- Organization of the testing team and its interaction with developers is all-important

- Finding good testers is as much of a challenge as finding good developers

- All these things are not easy, but doable

- If done properly, it is worth the trouble and expense

Acknowledgement

Cartoon by Sergey Gordeev from Gordeev Animation Graphics, Prague.

References

[Levy89] Levy, L. B., & Bell, S. Y. (1989). Software product liability: understanding and minimizing the risks. High Tech. LJ, 5, 1.

[Loganberry04] David ‘Loganberry’, Frithaes! – an Introduction to Colloquial Lapine!, http://bitsnbobstones.watershipdown.org/lapine/overview.html

[NoBugs11] Sergey Ignatchenko. The Guy We’re All Working For. Overload 103.

[Techdirt11] UK Court Says Software Company Can Be Liable For Buggy Software. https://www.techdirt.com/blog/innovation/articles/20100513/0053499408.shtml