Lock-free programming can be difficult. Sergey Ignatchenko shows how compare-and-swap can work for reactors.

Disclaimer: as usual, the opinions within this article are those of ‘No Bugs’ Hare, and do not necessarily coincide with the opinions of the translators and Overload editors; also, please keep in mind that translation difficulties from Lapine (like those described in [ Loganberry04 ]) might have prevented an exact translation. In addition, the translator and Overload expressly disclaim all responsibility from any action or inaction resulting from reading this article.

Those of you who happen to follow my ramblings probably already know that I am a big fan of so-called (Re)Actors (see, for example, [ NoBugs10 ], [ NoBugs15 ], and [ NoBugs17 ]).

Very, very briefly, a (Re)Actor is a thing which is known under a dozen different names, including ‘Actor’, ‘Reactor’, ‘event-driven program’, and ‘ad hoc state machine’. What is most important for us now is that the logic within a (Re)Actor is inherently thread-agnostic; in other words, logic within the (Re)Actor runs without the need to know about inter-thread synchronization (just as a single-threaded program would do). This has numerous benefits: it simplifies development a lot, makes the logic deterministic and therefore testable (and determinism further enables such goodies as post-mortem production debugging and replay-based regression testing), tends to beat mutex-based multithreaded programs performance-wise, etc. etc. And in 2017, I started to feel that the Dark Ages of mutex-based thread sync were over, and that more and more opinion leaders were starting to advocate message-passing approaches in general (see, for example, [ Henney17 ] and [ Kaiser17 ]) and (Re)Actors in particular.

Next, let’s note that in spite of the aforementioned single-threaded (or, more precisely, thread-agnostic) nature of each single (Re)Actor, multiple (Re)Actors can be used to build Shared-Nothing near-perfectly-scalable multi-threaded/multi-core systems [ NoBugs17 ]. This observation has recently led me to a not-so-trivial realization that in quite a few cases, we can use (Re)Actors to… <drumroll /> implement non-blocking multithreaded primitives. The specific problem I was thinking about at that point was a multiple-writer single-reader (MWSR) blocking-only-when-necessary queue with flow control, but I am certain the concept is applicable to a wide array of multithreaded primitives.


Basic Idea – CAS (Re)Actor

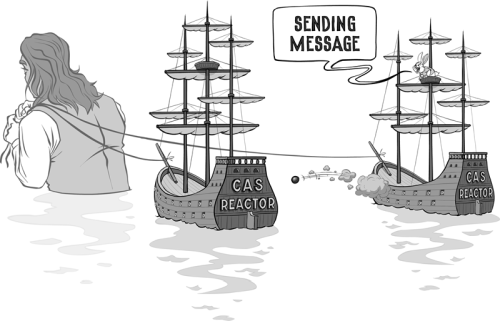

As noted above, distributed systems consisting of multiple (Re)Actors are known to work pretty well. Basically, in what I call a (Re)Actor-fest architecture, all we have is a bunch of (Re)Actors, which exchange messages with each other, with nothing more than this bunch of (Re)Actors in sight. Apparently, this model is sufficient to implement any distributed real-world system I know about (and very practically too).

Now, let’s try to use pretty much the same idea to build a multithreaded primitive (using (Re)Actors with an ultra-small state). Let’s start with the following few basic concepts:

- We have one or more (Re)Actors.
- Each of these (Re)Actors has its state fitting into one CAS block (i.e. the whole state can be processed within one CAS operation). Let’s call these (Re)Actors ‘CAS (Re)Actors’.
  - When we’re saving the state to the CAS block, all kinds of compression are permissible, as long as we guarantee that the state always fits into one single CAS block. In particular, all kinds of bitfields are perfectly fine.
- All interactions between (Re)Actors are implemented as message exchanges (i.e. no (Re)Actor can access another (Re)Actor’s state, except via sending a message asking to perform a certain operation).

As for the nature of messages – it depends, and in theory they can be as complicated as we wish, but in practice most of the time they will be as simple as a tuple (enum message_type, some_int_t parameter).
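As a rough illustration of how small such messages and states can be, here is a minimal sketch. Everything in it is hypothetical and invented for this example (MessageType, Message, QueueState, its field widths, and the apply() function); it only shows a (type, integer) message and a bitfield-packed state that fits into 64 bits.

```cpp
#include <cassert>
#include <cstdint>
#include <type_traits>

// Hypothetical message kinds for an imaginary queue-like primitive.
enum class MessageType : std::uint8_t { ItemsPushed, ItemsPopped };

// A message is just (type, integer parameter).
struct Message {
    MessageType type;
    std::uint32_t param;
};

// Hypothetical state squeezed into one 64-bit word with bitfields.
struct QueueState {
    std::uint64_t items          : 20;  // number of items currently queued
    std::uint64_t reader_blocked : 1;   // 1 if the reader is waiting
    std::uint64_t unused         : 43;  // room for more fields
};
static_assert(sizeof(QueueState) == 8, "state must fit into one 64-bit CAS block");
static_assert(std::is_trivially_copyable<QueueState>::value,
              "state must be usable with std::atomic");

constexpr std::uint64_t kItemsMask = 0xFFFFF;  // 20 bits

// Thread-agnostic handler: takes a state and a message, returns the new state.
// It touches nothing except its arguments.
inline QueueState apply(QueueState s, Message m) {
    std::uint64_t items = s.items;
    switch (m.type) {
    case MessageType::ItemsPushed:
        items = (items + m.param) & kItemsMask;
        s.reader_blocked = 0;
        break;
    case MessageType::ItemsPopped:
        assert(m.param <= items);
        items -= m.param;
        if (items == 0) s.reader_blocked = 1;
        break;
    }
    s.items = items;
    return s;
}
```

Because apply() is a pure function of its arguments, it can be re-run any number of times on a fresh copy of the state, which is exactly what a CAS retry loop needs.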

As soon as this is in place, we can write and use our (Re)Actors as shown in Listing 1, annotated with (a), (b), (c) and (d) to correspond with the following explanation. The logic within the infinite while loop with compare_exchange_weak() inside is very standard for CAS-based primitives. First, we read the data (in our case, in the constructor). Then we go into an infinite loop: (a) calculating a new value for the CAS block; (b) executing compare_exchange_weak(). If compare_exchange_weak() returns true (c), our job is done, and we can return the value. If, however, compare_exchange_weak() returns false, this guarantees that our attempt did not modify the CAS block, so we can simply discard all our on-stack changes to bring the system to the exact state it was in before we started (but with an up-to-date value for last_read, which compare_exchange_weak() refreshes on failure), and try again (d). In practice, it is extremely rare to have more than 2–3 rounds within this ‘infinite’ loop, but in theory, on a highly contended CAS block, any number of iterations is possible.

```cpp
#include <atomic>

using CAS = std::atomic<CAS_block>;
CAS global_cas; // accessible from multiple threads
                // in practice, shouldn't be global
                // but for the example it will do

class ReactorAData { // state of our ReactorA
  CAS_block data;

public:
  ReactorAData() { ... }

private:
  int OnEventX_mt_agnostic(int param) {
    // modifies our data
    // absolutely NO worries about multithreading here(!)
    // MUST NOT have any side effects
    // such as modifying globals etc.
    //...
  }
  // other OnEvent*_mt_agnostic() handlers

  friend class ReactorAHandle;
};

class ReactorAHandle { // 'handle' to the state of ReactorA
  CAS* cas; // points to global_cas
  ReactorAData last_read;

public:
  ReactorAHandle(CAS* cas_) {
    cas = cas_;
    last_read.data = cas->load(); // initial read of the CAS block
  }
  int OnEventX(int param) {
    while(true) {
      ReactorAData new_data = last_read;
      int ret = new_data.OnEventX_mt_agnostic(param); //(a)
      bool ok = cas->compare_exchange_weak(
          last_read.data, new_data.data ); //(b)
      if( ok )
        return ret; //(c)
      //(d) last_read.data now holds the current value; try again
    }
  }
  // other OnEvent*() handlers
};
```

Listing 1

Another way to see it is to say that what we’re doing here is an incarnation of the good old optimistic locking: we’re just trying to perform a kinda-‘transaction’ over our CAS block, with the kinda-‘transaction’ being a read-modify-write performed in an optimistic manner. If a mid-air collision (= “somebody has already modified the CAS block while we were working”) happens, it will be detected by compare_exchange_weak(), and – just as for any other optimistic locking – we just have to roll back our kinda-‘transaction’ and start over.
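To make the mid-air collision concrete, here is a minimal single-threaded sketch in which two handles stand in for two threads whose operations interleave. It is not the author’s code: SlotCounterData, SlotCounterHandle, kMaxSlots, the retries field, and collision_demo() are hypothetical names, and a plain 64-bit word is assumed as the CAS block.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

using CasBlock = std::uint64_t;       // assumption: one 64-bit word is enough here
constexpr CasBlock kMaxSlots = 3;     // hypothetical capacity

// Thread-agnostic state: counts how many slots are taken.
struct SlotCounterData {
    CasBlock data = 0;
    // Returns 1 if a slot was taken, 0 if all slots are in use.
    // Touches nothing but *this, so it can be re-run after a failed CAS.
    int OnTakeSlot() {
        if (data >= kMaxSlots) return 0;
        ++data;
        return 1;
    }
};

class SlotCounterHandle {
    std::atomic<CasBlock>* cas;
    SlotCounterData last_read;
public:
    int retries = 0;                  // for illustration only
    explicit SlotCounterHandle(std::atomic<CasBlock>* cas_) : cas(cas_) {
        last_read.data = cas->load();
    }
    int OnTakeSlot() {
        while (true) {
            SlotCounterData new_data = last_read;   // private on-stack copy
            int ret = new_data.OnTakeSlot();
            if (cas->compare_exchange_weak(last_read.data, new_data.data)) {
                last_read = new_data; // remember what we have just published
                return ret;
            }
            ++retries;                // collision or spurious failure: roll back, retry
        }
    }
};

// Returns the final value of the shared block; reports how often 'A' retried.
inline CasBlock collision_demo(int& retries_of_a) {
    std::atomic<CasBlock> shared{0};
    SlotCounterHandle a(&shared);     // 'thread A' reads 0
    SlotCounterHandle b(&shared);     // 'thread B' reads 0
    b.OnTakeSlot();                   // B publishes 1 first
    a.OnTakeSlot();                   // A's first CAS expects 0 but finds 1, so it retries
    retries_of_a = a.retries;
    return shared.load();
}
```

Handle A has to retry at least once, because its view of the block is stale by the time it attempts the CAS. The exact retry count is not deterministic on every platform, since compare_exchange_weak() is allowed to fail spuriously.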

That’s pretty much it! We’ve got our multithread-safe event-handling function OnEventX() for ReactorAHandle, while our OnEventX_mt_agnostic() function is, well, multithread-agnostic. This means that we do NOT need to think about multithreading while writing OnEventX_mt_agnostic(). This alone counts as a Big Fat Improvement™ when designing correct multithreaded primitives/algorithms.

Moreover, with these mechanics in place, we can build our multithreaded primitives out of multiple (Re)Actors using the very-simple-to-follow logic of “hey, to do this operation, I – as a (Re)ActorA – have to change my own state and to send such-and-such message to another (Re)ActorB”. This effectively introduces a layer of abstraction, which tends to provide a much more manageable approach to designing multithreaded primitives/algorithms than designing them right on top of bare CAS (such designs are rather difficult to grasp, and happen to be even more difficult to get right).
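One possible way to express “change my own state and send a message to (Re)ActorB” is sketched here. The article does not prescribe this design: having the thread-agnostic handler return the outgoing message, and delivering it only after the CAS has succeeded, is an assumption made for this example, as are the names Msg, QueueData, ReaderData, react() and push().

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

enum class Msg : std::uint8_t { None, WakeReader };  // hypothetical message set

// (Re)ActorA: hypothetical queue state, holding just the number of items.
struct QueueData {
    std::uint64_t data = 0;
    // Returns the message to send to (Re)ActorB; sends nothing itself.
    Msg OnPush() { ++data; return data == 1 ? Msg::WakeReader : Msg::None; }
};

// (Re)ActorB: hypothetical reader state, holding a single wake-up flag.
struct ReaderData {
    std::uint64_t data = 0;
    Msg OnMessage(Msg m) { if (m == Msg::WakeReader) data = 1; return Msg::None; }
};

// Generic optimistic loop: run the handler on a copy, publish the copy with CAS.
template <class Data, class Handler>
Msg react(std::atomic<std::uint64_t>& cas, Handler handler) {
    Data last_read;
    last_read.data = cas.load();
    while (true) {
        Data new_data = last_read;
        Msg out = handler(new_data);   // no side effects outside new_data
        if (cas.compare_exchange_weak(last_read.data, new_data.data))
            return out;                // only now is it safe to act on 'out'
    }
}

// One operation spanning two CAS (Re)Actors.
inline void push(std::atomic<std::uint64_t>& queue_cas,
                 std::atomic<std::uint64_t>& reader_cas) {
    Msg out = react<QueueData>(queue_cas, [](QueueData& d) { return d.OnPush(); });
    if (out != Msg::None)
        react<ReaderData>(reader_cas, [out](ReaderData& d) { return d.OnMessage(out); });
}
```

Note that the two state changes are not atomic with respect to each other: another thread can observe the queue state already updated while the message to the reader is still in flight, just as in any distributed system built out of (Re)Actors.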

Of course, as always, it is not really a silver bullet, and there are certain caveats. In particular, two things are going to cause us trouble on the way: (a) a limitation on CAS block size, and (b) the ABA problem.

On CAS block size

One thing which traditionally plagues writers of multithreaded primitives is a limitation on the CAS block size. Fortunately, all modern x64 CPUs support CMPXCHG16B operations, which means that we’re speaking about 128-bit CAS blocks for our (Re)Actors. This, while not being much, happens to be not too shabby for the purposes of our extremely limited (Re)Actors.

To further help with the limitations, we can observe that (rather unusually for CAS-based stuff) we can use all kinds of bit-packing techniques within our CAS_block. In other words, if we have to have a field within ReactorAData::data, we can use as many bits as we need, and don’t need to care about alignments, byte boundaries, etc. In addition, we can (and often should) use indexes instead of pointers (which usually helps to save quite a few bits), etc. etc.
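A minimal sketch of such bit-packing follows, using a 64-bit block for brevity. The layout, the field names (slot_index, phase, counter) and the helpers (mask(), pack(), unpack()) are hypothetical and chosen only to show fields that ignore byte boundaries and an index used in place of a pointer.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical layout of a 64-bit CAS block (widths are illustrative only):
//   bits  0..16  slot_index  (17 bits: an index into a slot array, not a pointer)
//   bits 17..19  phase       (3 bits)
//   bits 20..63  counter     (44 bits)
struct Unpacked {
    std::uint32_t slot_index;
    std::uint8_t  phase;
    std::uint64_t counter;
};

// All-ones mask of the given width (valid for widths below 64).
constexpr std::uint64_t mask(unsigned bits) {
    return (std::uint64_t{1} << bits) - 1;
}

inline std::uint64_t pack(const Unpacked& u) {
    assert(u.slot_index <= mask(17) && u.phase <= mask(3) && u.counter <= mask(44));
    return std::uint64_t{u.slot_index}
         | (std::uint64_t{u.phase} << 17)
         | (u.counter << 20);
}

inline Unpacked unpack(std::uint64_t block) {
    return Unpacked{ static_cast<std::uint32_t>(block & mask(17)),
                     static_cast<std::uint8_t>((block >> 17) & mask(3)),
                     block >> 20 };
}
```

The same approach extends to a 128-bit block held in a 16-byte struct, but whether std::atomic over such a struct is actually lock-free depends on the compiler and its flags, so it is worth checking is_lock_free() on the target platform.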

Solving the ABA problem

Another issue which almost universally rears its ugly head when speaking about not-so-trivial uses of CAS is the so-called ABA problem. Very, very roughly it is about the system being in exactly the same state under CAS, while being in a different semantic state (for examples of ABA in action, see, for example, [ Wikipedia-2 ]).

Of course, the same problem would apply to our CAS (Re)Actors too. However, there is a neat workaround. If we keep a special ABAcounter field as a part of our ReactorAData::data, counting successful modifications of ReactorAData::data (i.e. we increment this counter on each and every modification of ReactorAData::data), then we’re guaranteed to avoid the ABA problem as long as the ABAcounter doesn’t overflow. This holds simply because each modification produces a different value of the CAS block, so we never run into the ‘having the same state’ situation.
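The effect of the counter can be sketched as follows. The layout (16-bit payload, 48-bit counter) and the names ReactorData, OnSetPayload() and stale_cas_succeeds() are assumptions made for this example; plain stores stand in for the other thread’s successful CAS operations.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Hypothetical 64-bit block: low 16 bits = payload, high 48 bits = ABAcounter.
struct ReactorData {
    std::uint64_t data = 0;
    std::uint16_t payload() const { return static_cast<std::uint16_t>(data & 0xFFFF); }
    std::uint64_t aba_counter() const { return data >> 16; }
    // Thread-agnostic modification: sets the payload and increments the counter.
    void OnSetPayload(std::uint16_t v) {
        data = ((aba_counter() + 1) << 16) | v;
    }
};

// Simulates the classic A -> B -> A sequence and reports whether a CAS
// based on the stale first read would (wrongly) succeed.
inline bool stale_cas_succeeds() {
    std::atomic<std::uint64_t> cas{0};

    ReactorData a;
    a.OnSetPayload(7);
    cas.store(a.data);                 // payload 7, counter 1

    std::uint64_t stale = cas.load();  // 'thread 1' reads and gets delayed

    ReactorData b;
    b.data = cas.load();
    b.OnSetPayload(9);
    cas.store(b.data);                 // 'thread 2': payload 9, counter 2
    b.OnSetPayload(7);
    cas.store(b.data);                 // 'thread 2': payload 7 again, counter 3

    // The strong variant is used so this single-shot check cannot fail spuriously.
    return cas.compare_exchange_strong(stale, a.data);
}
```

Although the payload is back to 7, the block as a whole differs from what ‘thread 1’ read, so its CAS fails and it is forced to re-read the state instead of acting on outdated assumptions.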

Now, let’s take a look at the question of counter overflow. Let’s consider a system with the CPU clock running at 3GHz, and a maximum lifetime of the running program being 10 years. Let’s also assume that CAS takes no less than 1 cycle (in practice, it takes 10+ at least for x64, but we’re being conservative here). Then, the most CAS operations we can possibly make within one single program run is 1 CAS/cycle * 3e9 cycles/sec * 86400 sec/day * 365 days/year * 10 years ~= 1e18 CAS operations. And as 1e18 can be represented with a mere 60 bits, this means that by using a 60-bit ABA counter, we’re protected from ABA even under extremely conservative assumptions.

NB: 40–48 bit counters will be more than enough for most practical purposes – but even a 60-bit counter is not too bad, especially as our whole allocation, as discussed above, is 128 bits (at least for x64).
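The estimate above can be checked at compile time. The sketch uses the same assumptions as the text (3GHz clock, one CAS per cycle, 10 years of uptime); bits_needed() and kMaxCasOps are hypothetical names introduced for this check.

```cpp
#include <cassert>
#include <cstdint>

// Number of bits in the binary representation of n,
// i.e. the smallest b such that n < 2^b.
constexpr unsigned bits_needed(std::uint64_t n) {
    return n == 0 ? 0 : 1 + bits_needed(n >> 1);
}

// 1 CAS/cycle * 3e9 cycles/sec * 86400 sec/day * 365 days/year * 10 years
constexpr std::uint64_t kMaxCasOps = 3000000000ULL * 86400ULL * 365ULL * 10ULL;

static_assert(kMaxCasOps == 946080000000000000ULL, "about 9.46e17, i.e. roughly 1e18");
static_assert(bits_needed(kMaxCasOps) == 60, "a 60-bit counter cannot overflow in this scenario");
```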

Relaxing the requirement for ABAcounter modifications

As discussed above (with sufficient sizes of ABAcounter) we can guarantee that no ABA problem occurs as long as we increment ABAcounter on each and every modification of our ReactorAData::data. However, there are cases when we can provide the same guarantees even when we skip incrementing on some of the modifications. More specifically, we can go along the following lines:

- We divide the fields within ReactorAData::data into two categories: (a) those fields ‘protected’ by ABAcounter, and (b) those fields ‘unprotected’ by ABAcounter.
- Then, we still increment ABAcounter on any modification to ‘protected’ fields, but are not required to increment ABAcounter on those modifications touching only ‘unprotected’ fields.
- Then, we’re still providing ‘no-ABA-problem’ guarantees as long as all our ‘unprotected’ fields have the property that the same value of those ‘unprotected’ fields is guaranteed to have the same semantic meaning.
  - For example, if we have a ‘number of current locks’ field within our ReactorAData::data – for most of the typical usage patterns, we don’t really care why this field got this value, but care only about its current value; this means that whatever we’re doing with this field, it is ABA-free even without the ABAcounter, so it can be left ‘unprotected’.
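The ‘protected’/‘unprotected’ split might look as follows. The layout and the names (lock_count, head_index, OnLock(), OnUnlock(), OnSetHead()) are hypothetical; the sketch only shows which handlers increment ABAcounter and which do not.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical 64-bit layout:
//   bits  0..7   lock_count  ('unprotected': only its current value matters)
//   bits  8..23  head_index  ('protected': same value may mean a different thing)
//   bits 24..63  ABAcounter  (40 bits)
struct ReactorData {
    std::uint64_t data = 0;

    std::uint64_t lock_count() const  { return data & 0xFF; }
    std::uint64_t head_index() const  { return (data >> 8) & 0xFFFF; }
    std::uint64_t aba_counter() const { return data >> 24; }

    // These touch only the 'unprotected' field: no counter increment.
    void OnLock()   { assert(lock_count() < 0xFF); data += 1; }
    void OnUnlock() { assert(lock_count() > 0);    data -= 1; }

    // This touches a 'protected' field: the counter is incremented.
    void OnSetHead(std::uint16_t idx) {
        std::uint64_t counter = aba_counter() + 1;
        data = (counter << 24) | (std::uint64_t{idx} << 8) | lock_count();
    }
};
```

After OnLock() followed by OnUnlock(), the block returns to exactly the same bits, which is harmless because a lock count of zero always means the same thing. After setting head_index to 5 and then back to 0, the counter has advanced twice, so the block differs from the original even though head_index looks unchanged.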

Conclusions and Ongoing Work

We presented a hopefully novel way of building non-blocking multithreaded primitives and algorithms, based on ‘CAS (Re)Actors’ (essentially – (Re)Actors with the size fitting into one CAS block).

This approach is practically interesting because it provides an additional layer of abstraction, and – as a result – allows us to reason about multithreaded primitives/algorithms in terms which don’t involve multithreading (in particular, such issues as the semantics of CAS and the ABA problem are out of the picture completely). Instead, the reasoning can be done in terms of distributed systems (more specifically – in terms of Actors, Reactors, event-driven programs, or ad hoc finite state machines). This, in turn, is expected to enable composing more complicated primitives/algorithms than is currently possible. In particular, the author is currently working on an MWSR queue with locking-only-when-necessary and providing different means of flow control; when the work is completed he hopes to present that in Overload too.

References

[Henney17] Kevlin Henney, Thinking Outside the Synchronisation Quadrant, ACCU2017

[Kaiser17] Hartmut Kaiser, The Asynchronous C++ Parallel Programming Model, CPPCON2017

[Loganberry04] David ‘Loganberry’, Frithaes! – an Introduction to Colloquial Lapine!, http://bitsnbobstones.watershipdown.org/lapine/overview.html

[NoBugs10] ‘No Bugs’ Hare, Single-Threading: Back to the Future?, Overload #97, 2010

[NoBugs15] ‘No Bugs’ Hare, Client-Side. On Debugging Distributed Systems, Deterministic Logic, and Finite State Machines, http://ithare.com/chapter-vc-modular-architecture-client-side-on-debugging-distributed-systems-deterministic-logic-and-finite-state-machines/

[NoBugs17] ‘No Bugs’ Hare, Development and Deployment of Multiplayer Online Games, Vol. II.

[Wikipedia-1] Wikipedia, Compare-and-Swap, https://en.wikipedia.org/wiki/Compare-and-swap

[Wikipedia-2] Wikipedia, ABA problem. https://en.wikipedia.org/wiki/ABA_problem

Acknowledgement

Cartoon by Sergey Gordeev from Gordeev Animation Graphics, Prague